Thesis

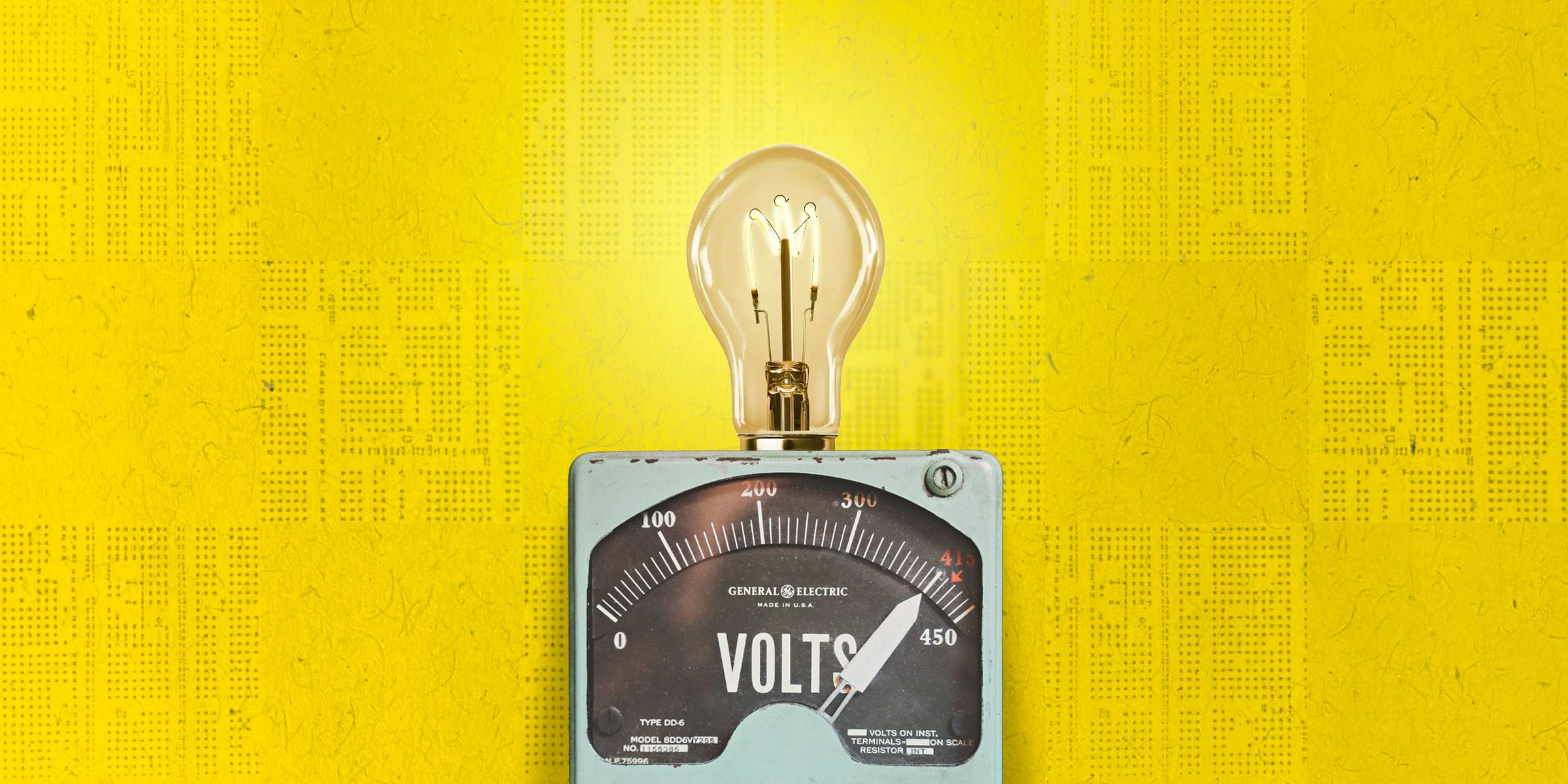

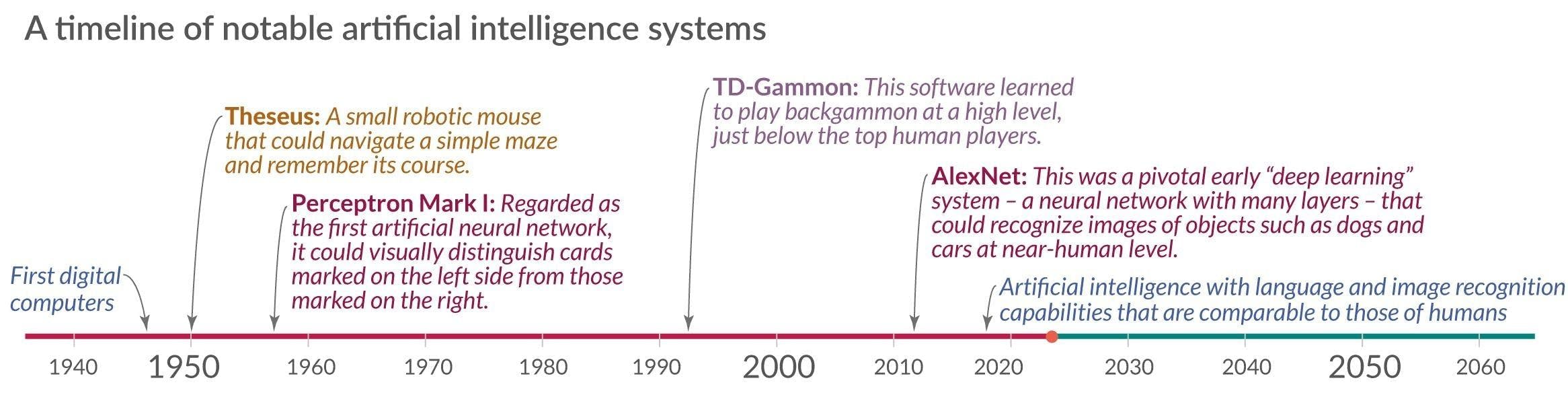

Historically, there have been two major tech-driven transformations, advancements that have been “powerful enough to bring us into a new, qualitatively different future”: the agricultural and industrial revolutions. These “general-purpose” technologies fundamentally altered the way that economies worked. In the short period that computers have existed, machines have already exceeded the intelligence of humans in some respects, leading some to believe that artificial intelligence (AI) is the next general-purpose transformation.

Source: Our World In Data

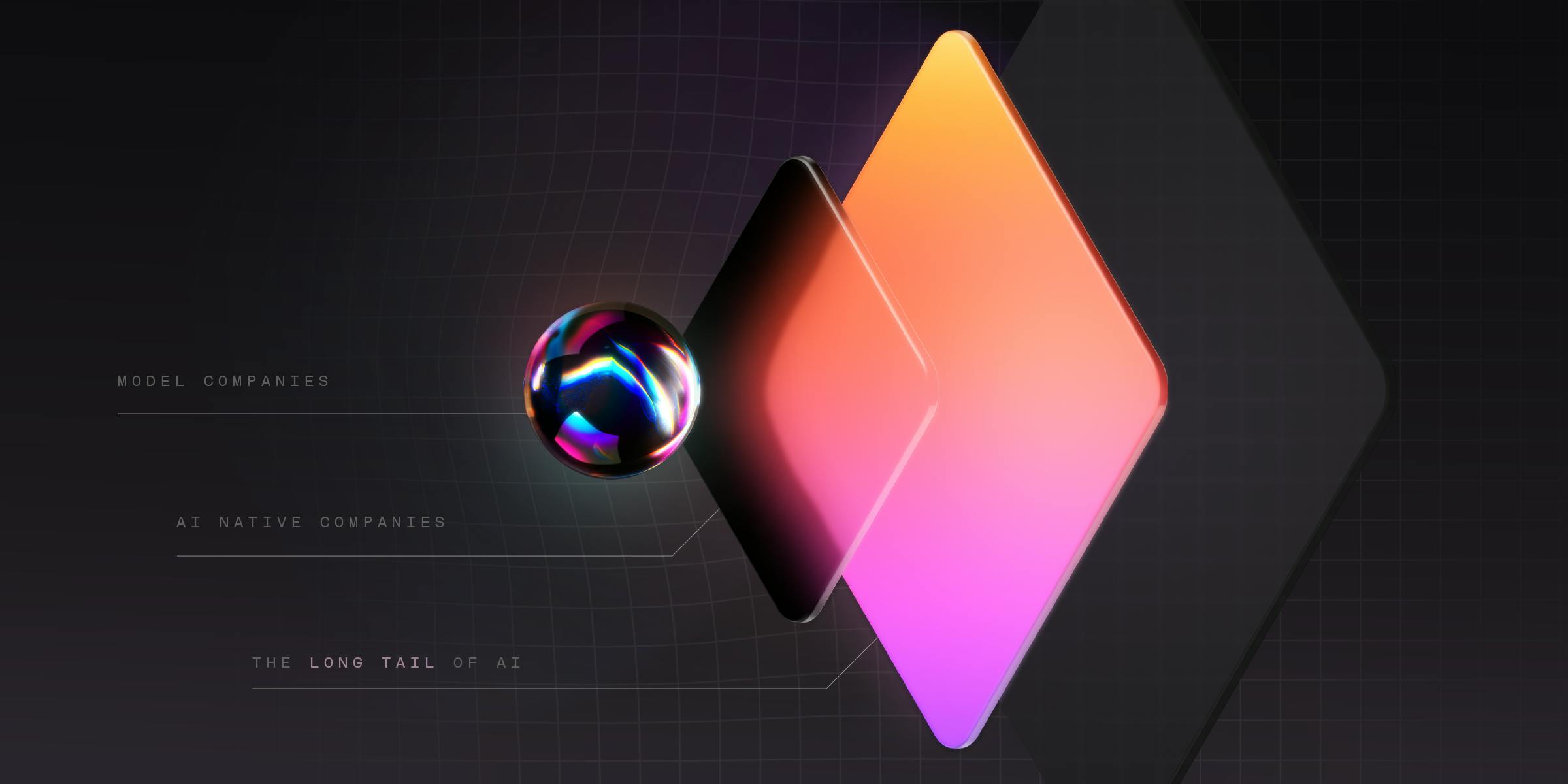

Like the shift from hunter-gatherers to farming or the rise in machine manufacturing, AI has been projected to have a significant impact across all parts of the economy. In particular, the rise in generative AI has led to massive breakthroughs in task automation across the economy at large. Well-funded research organizations such as OpenAI and Cohere have leveraged proprietary natural language processing (NLP) models to be functional for enterprises. These “foundation models” provide a basis for hundreds, if not thousands, of individual developers and institutions to build new AI applications.

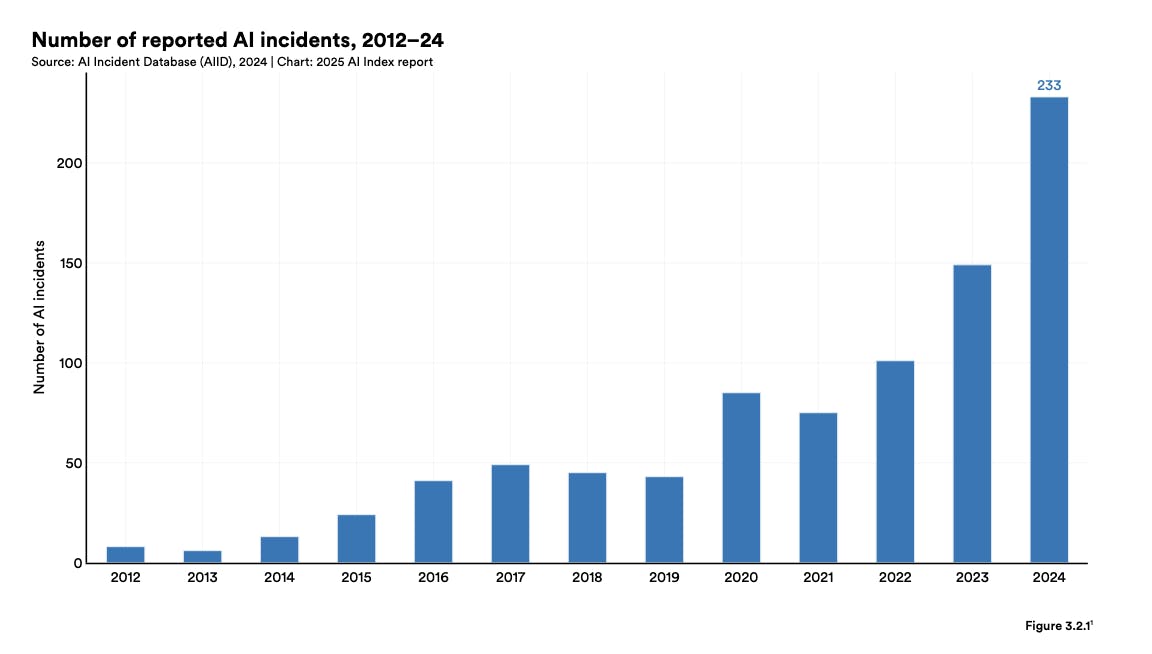

However, with rising competition between foundation model organizations, along with the general growth in AI adoption, ethical concerns have been raised regarding the rapid development and harmful use cases of AI systems. In March 2023, over 1,000 people, including OpenAI co-founder Elon Musk, signed an open letter calling for a six-month “AI moratorium”, claiming that many AI organizations were “locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.”

In February 2024, Musk sued OpenAI and chief executive Sam Altman over “breaching a contract by putting profits and commercial interests in developing artificial intelligence ahead of the public good.” Though the lawsuit was dropped in June 2024, Musk’s allegations underscore the delicate balance between speed and ethics in the development of AI. These concerns have only intensified as models have grown more powerful. Anthropic's Claude 4 family, released in May 2025, was the first to require the company's AI Safety Level 3 (ASL-3) classification. Both the capabilities being achieved and the introduction of new safety protocols point to the growing need for responsible development practices.

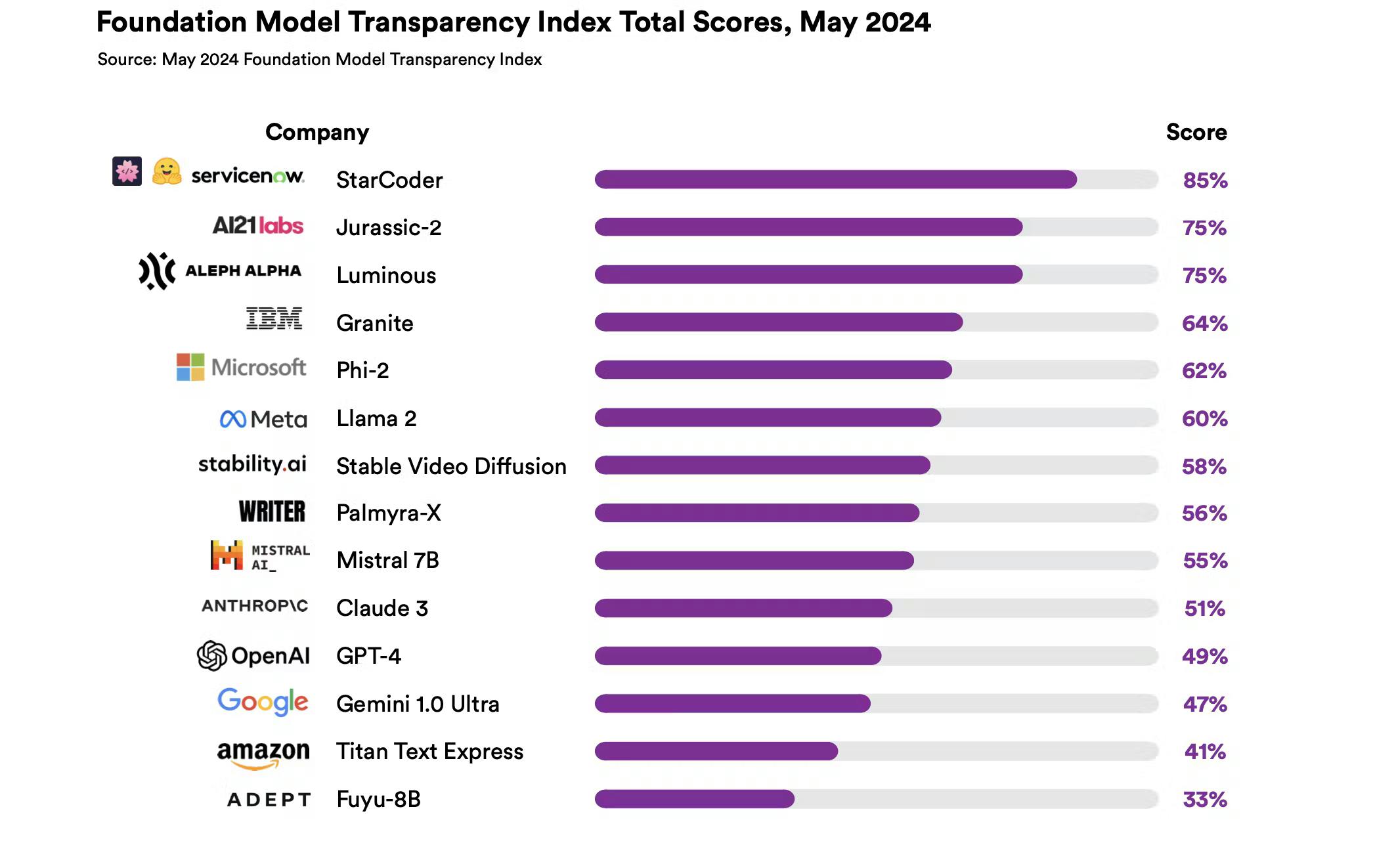

Amongst foundation model developers, Anthropic has positioned itself as a company with a particular focus on AI safety and describes itself as building “AI research and products that put safety at the frontier.” Founded by engineers who quit OpenAI due to tension over ethical and safety concerns, Anthropic has developed its own method to train and deploy “Constitutional AI”, or large language models (LLMs) with embedded values that can be controlled by humans. Since its founding, its goal has been to deploy “large-scale AI systems that are steerable, interpretable, and robust”, and it has continued to push towards a future powered by responsible AI.

Founding Story

Anthropic was founded in 2021 by ex-OpenAI VPs and siblings Dario Amodei (CEO) and Daniela Amodei (President). Prior to launching Anthropic, Dario Amodei was the VP of Research at OpenAI, while Daniela was the VP of Safety & Policy at OpenAI. Aside from Dario and Daniela, a number of other OpenAI employees left to start Anthropic as co-founders, including Benjamin Mann (Head of Anthropic Labs), Jared Kaplan (Chief Science Officer), Jack Clark, Sam McCandlish (Chief Architect), Tom Brown, and Christopher Olah (Interpretability Research Lead).

Source: The Information

In 2016, Dario Amodei, along with other coworkers at Google, co-authored “Concrete Problems in AI Safety”, a paper discussing the inherent unpredictability of neural networks. The paper introduced the notion of side effects and unsafe exploration of the capabilities of different models. Many of the issues discussed could be traced to a lack of “mechanistic interpretability”, or understanding of the inner workings of complex models. The co-authors, many of whom would also join OpenAI alongside Dario years later, sought to communicate the safety risks of rapidly scaling models, and that thought process would eventually become the foundation of Anthropic.

In 2019, OpenAI announced that it would be restructuring from a nonprofit to a “capped-profit” organization, a move attributed to its heightened ability to deliver returns for investors. It received a $1 billion investment from Microsoft later that year to continue its development of benevolent artificial intelligence, as well as to power AI supercomputing services on Microsoft Azure’s cloud platform. Oren Etzioni, CEO of the Allen Institute for AI, commented on this shift by saying:

“[OpenAI] started out as a non-profit, meant to democratize AI. Obviously when you get [$1 billion] you have to generate a return. I think their trajectory has become more corporate.”

OpenAI’s restructuring ultimately generated internal tension regarding its direction as an organization with the intention to “build safe AGI and share the benefits with the world.” It was reported that “the schism followed differences over the group’s direction after it took a landmark $1 billion investment from Microsoft in 2019.” In particular, the fear of “industrial capture” or OpenAI’s monopolization of the AI space loomed over many within OpenAI, including Jack Clark, former policy director, and Chris Olah, mechanistic interpretability engineer.

Anthropic CEO Dario Amodei attributed Anthropic’s eventual split from OpenAI to concerns over AI safety and described the decision to leave OpenAI to found Anthropic this way:

“There was a group of us within OpenAI, that in the wake of making GPT-2 and GPT-3, had a kind of very strong focus belief in two things... One was the idea that if you pour more compute into these models, they’ll get better and better and that there’s almost no end to this... The second was the idea that you needed something in addition to just scaling the models up, which is alignment or safety. You don’t tell the models what their values are just by pouring more compute into them. And so there were a set of people who believed in those two ideas. We really trusted each other and wanted to work together. And so we went off and started our own company with that idea in mind.”

Dario Amodei left OpenAI in December 2020, and 14 other researchers, including his sister Daniela Amodei, eventually left to join Anthropic as well. The co-founders structured Anthropic as a public benefit corporation, in which the board has a “fiduciary obligation to increase profits for shareholders,” but the board can also legally prioritize its mission of ensuring that “transformative AI helps people and society flourish.” This gives Anthropic more flexibility to pursue AI safety and ethics over increasing profits.

In November 2024, Amodei pointed out that Anthropic's team grew from approximately 300 to 950 people in less than a year, before intentionally slowing hiring to maintain quality. "Talent density beats talent mass," Amodei said. "We've focused on senior hires from top companies and theoretical physicists who learn fast." As of 2025, the company has 1,097 employees, representing a 471% increase since 2022.

In May 2024, Anthropic made several notable leadership hires, including Krishna Rao as its first CFO, Instagram co-founder Mike Krieger as its Chief Product Officer, and Jan Leike as the leader of a new safety team. In 2025, Anthropic hired its first Chief Commercial Officer, Paul Smith, and its first managing director of international, Chris Ciauri, a former Google Cloud executive. These hires coincided with the company’s push to expand its global footprint, announcing in September 2025 plans to triple its international workforce and recruit across India, Japan, Korea, Australia, and broader Europe, with 78.3% of Claude’s global usage coming from outside the US as of September 2025.

In January 2026, after two years, Krieger transitioned from Chief Product Officer to leading a new team focused on incubating experimental products, Anthropic Labs, alongside co-founder Benjamin Mann. Krieger was replaced by Ami Vora, who joined Anthropic as Head of Product in December 2025.

Product

Foundation Model Research

Since its founding, Anthropic has dedicated its resources towards “large-scale AI systems that are steerable, interpretable, and robust” with an emphasis on its alignment with human values to be “helpful, honest, and harmless”. As of February 2026, Anthropic’s four research teams were Interpretability, Alignment, Societal Impacts, and Frontier Red Team, underscoring the company’s commitment to developing general AI assistants that abide by human values. The company has published over 60 papers in these research areas.

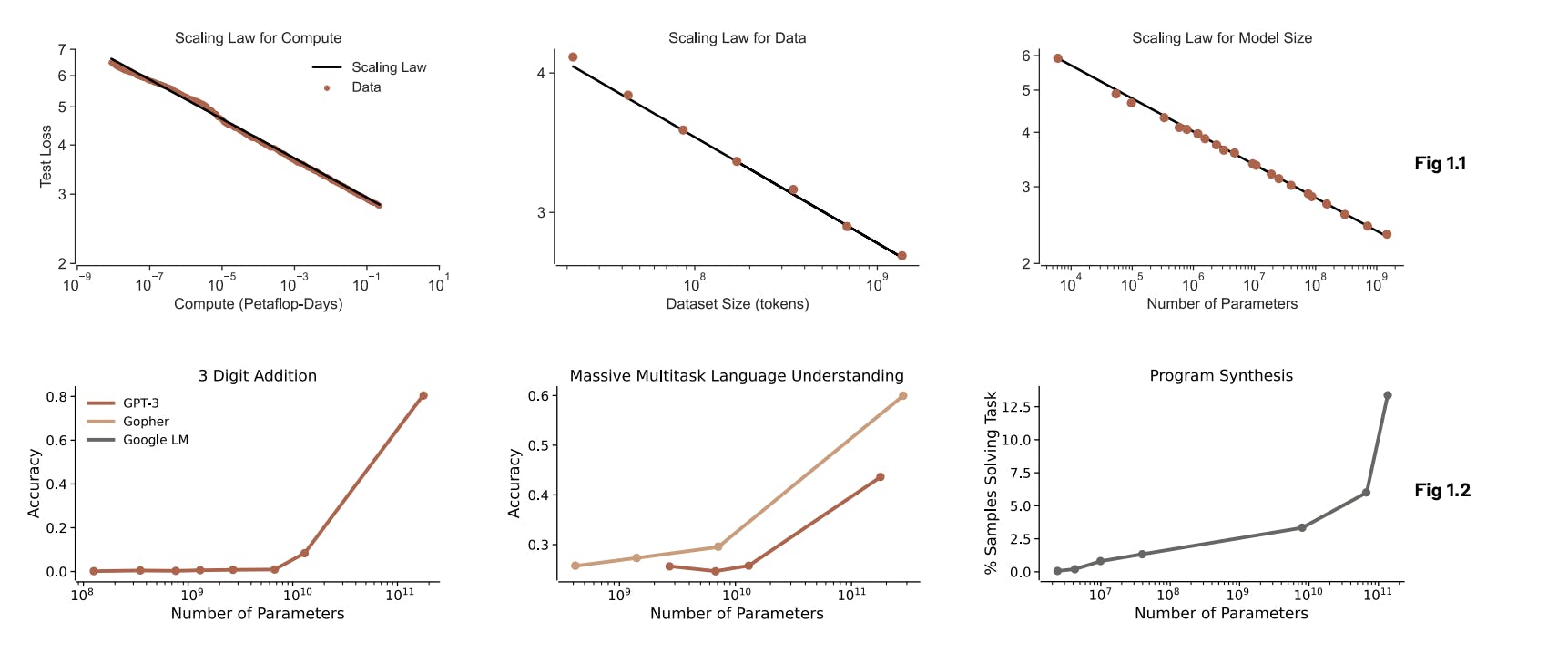

One of Anthropic’s first papers, “Predictability and Surprise in Large Generative Models,” was published in February 2022 and investigated emergent capabilities in LLMs. These capabilities can “appear suddenly and unpredictably as model size, computational power, and training data scale up.” The paper identified that although the accuracy of models increased consistently with the number of model parameters, the accuracy of some tasks (e.g., three-digit addition) surprisingly seemed to skyrocket upon reaching certain parameter count thresholds. The authors noted that developers were unable to precisely predict which new abilities would emerge or improve. This could lead to unintended consequences, especially if solely used for economic gains or in the absence of policy interventions.

Source: Anthropic

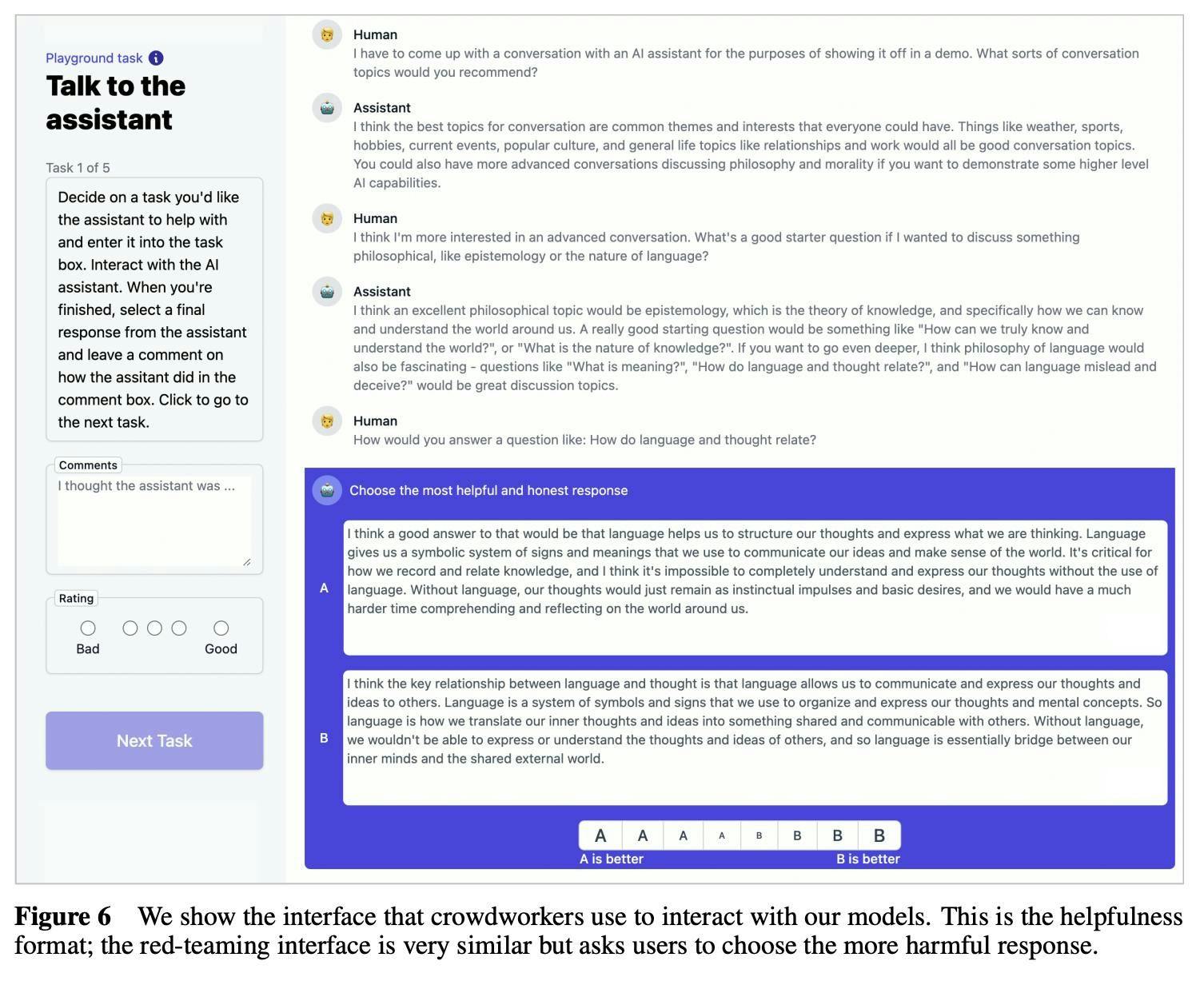

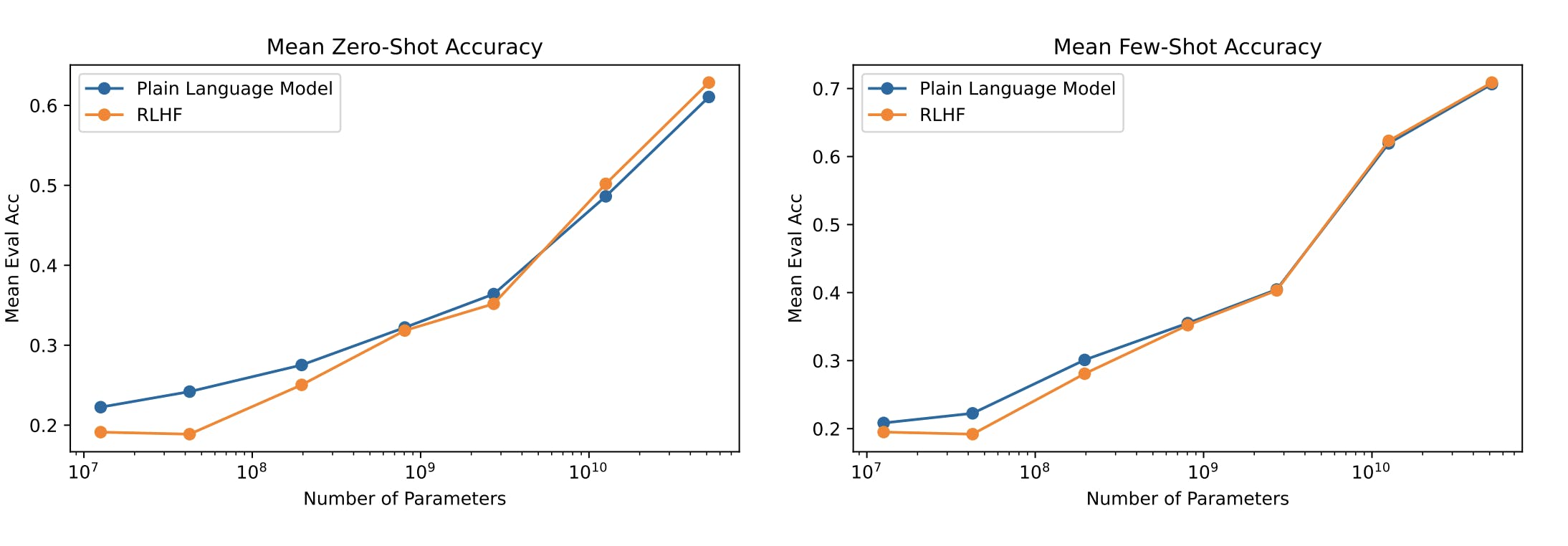

In April 2022, Anthropic introduced a methodology of using preference modeling and reinforcement learning from human feedback (RLHF) to train “helpful and harmless” AI assistants. While RLHF had been studied in OpenAI’s InstructGPT paper and Google’s LaMDA paper, both published in January 2022, Anthropic was the first to explore “online training,” in which the model is updated during the [crowdworker] process, and to measure the “tension between helpfulness and harmlessness.” In RLHF, models engage in open-ended conversations with human crowdworkers, generating multiple responses for each input prompt. The human then chooses the response they find most helpful and/or harmless, rewarding the model for either trait over time.

Source: Anthropic
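The pairwise choices described above can be turned into a training signal in several ways. The snippet below is a minimal sketch of one common choice, a Bradley-Terry style pairwise loss on scalar reward scores. It is an illustrative assumption, not Anthropic's published code, and the function names `preference_probability` and `preference_loss` are hypothetical.

```python
import math


def preference_probability(reward_chosen: float, reward_rejected: float) -> float:
    """Modelled probability that the chosen response beats the rejected one."""
    margin = reward_chosen - reward_rejected
    return 1.0 / (1.0 + math.exp(-margin))


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(margin)), written to avoid overflow."""
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        # log(1 + exp(-margin)) is safe when margin is non-negative
        return math.log1p(math.exp(-margin))
    # For negative margins, rewrite as -margin + log(1 + exp(margin))
    return -margin + math.log1p(math.exp(margin))
```

The loss is small when the reward model already scores the human-preferred response higher, and grows when it ranks the pair the wrong way round, which is what pushes the model toward the crowdworkers' choices.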

Ultimately, such alignment efforts made the RLHF models as capable as, or potentially more capable than, plain language models on zero-shot and few-shot tasks (i.e., tasks entirely without examples or with limited prior examples).

Among other findings, the authors of the paper emphasized the “tension between helpfulness and harmlessness”. For example, a model trained to always give accurate responses would become harmful upon being fed hazardous prompts. Conversely, a model that answers “I cannot answer that” to potentially dangerous prompts, including opinionated prompts, though not harmful, would also not be helpful.

Source: Anthropic

Anthropic has since been able to use its findings to push language models toward desired outcomes such as avoiding social biases, adhering to ethical principles, and self-correction. In December 2023, the company published a paper titled “Evaluating and Mitigating Discrimination in Language Model Decisions.” The paper studied how language models may make decisions for various use cases, including loan approvals, permit applications, and admissions, as well as effective mitigation strategies for discrimination, such as appending non-discrimination statements to prompts and encouraging out-loud reasoning.

In May 2024, Anthropic published a paper on understanding the inner workings of one of their LLMs to improve the interpretability of AI models and work towards safe, understandable AI. Using a type of dictionary learning algorithm called a sparse autoencoder, the authors were able to produce interpretable features of the LLM, which could be used to steer LLMs and identify potentially dangerous or harmful features. Ultimately, this paper lays the groundwork for further research into interpretability and safety strategies.

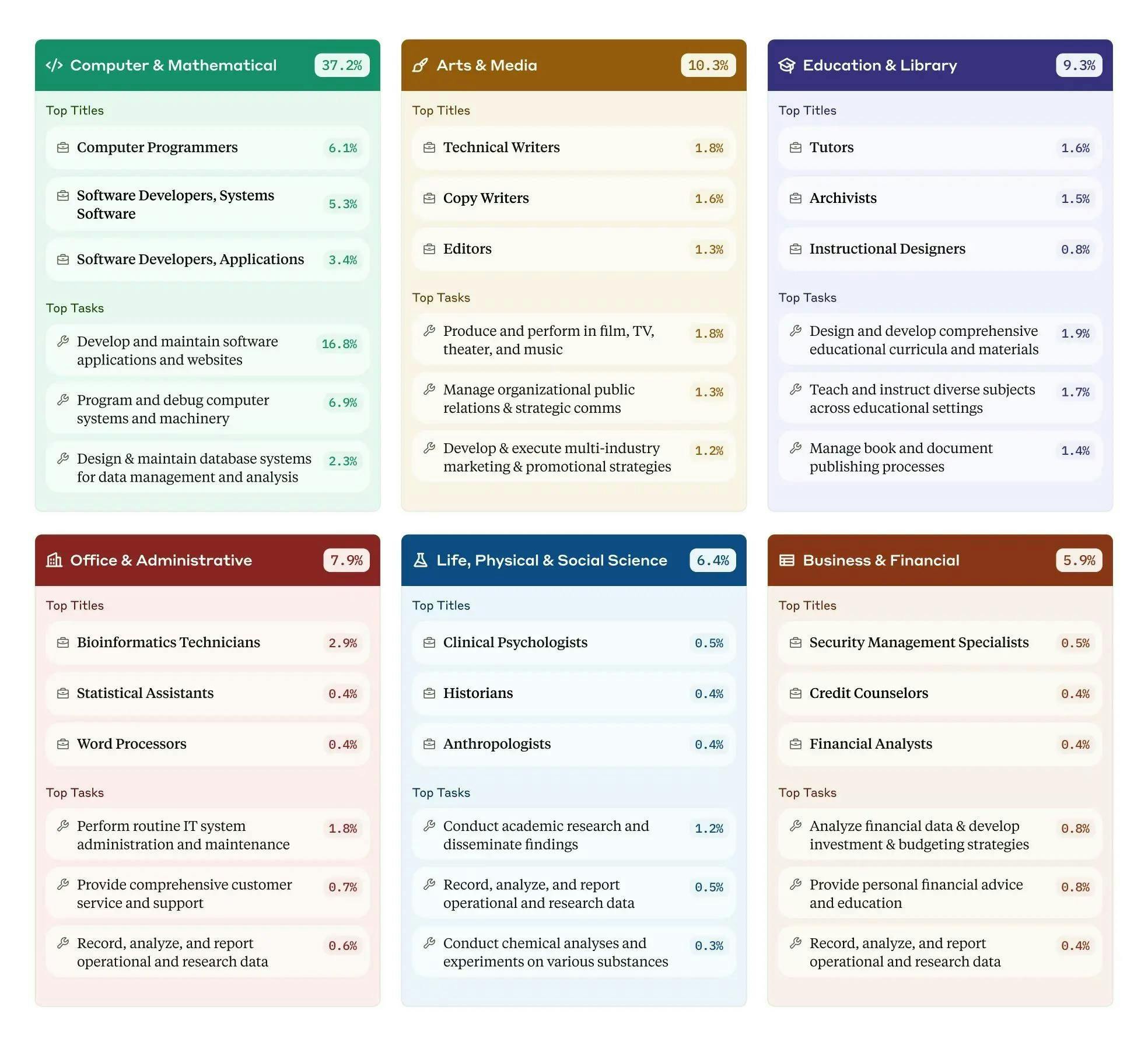

In February 2025, Anthropic introduced the Anthropic Economic Index, a continuous series of reports focused on calculating the impact of AI on labor markets and the wider economy over time. The initial report analyzed millions of anonymized conversations with Anthropic’s chatbot Claude and broke down its usage across different categories of users, tasks, and professions.

Constitutional AI

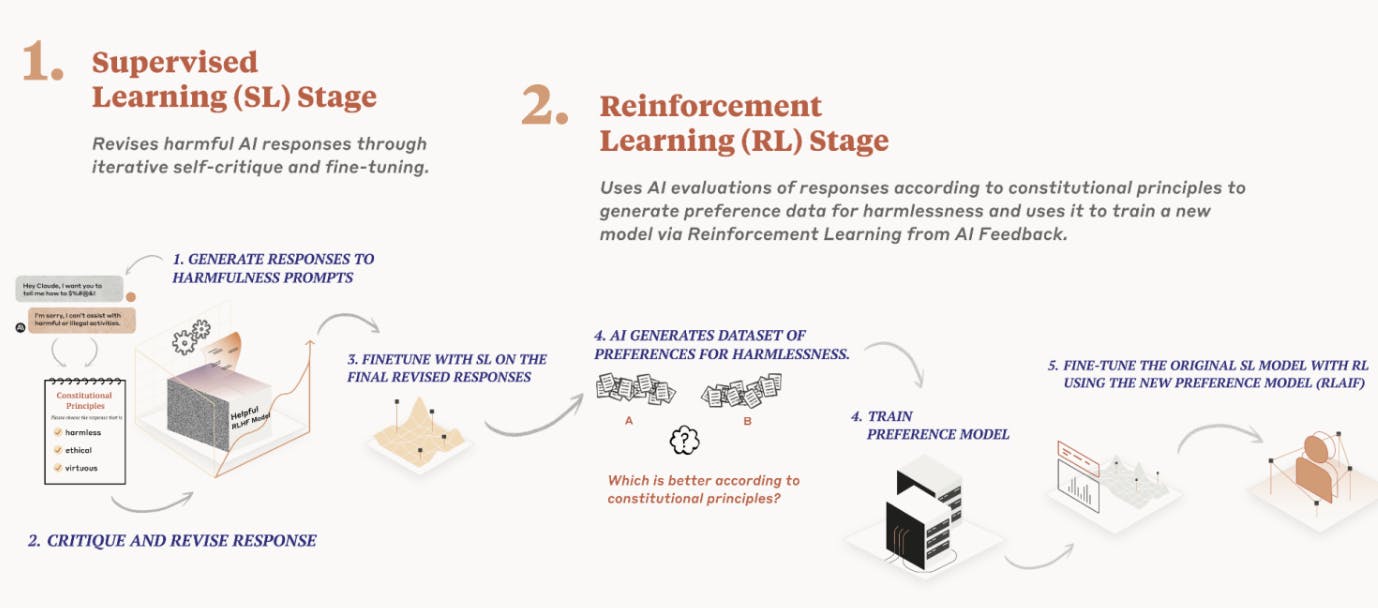

In December 2022, Anthropic released a novel approach to training helpful and harmless AI assistants. Labeled “Constitutional AI”, the process involved (1) training a model via supervised learning to abide by certain ethical principles inspired by various sources, including the UN’s Declaration of Human Rights, Apple’s Terms of Service, and Anthropic’s own research, (2) creating a similarly-aligned preference model, and (3) using the preference model to judge the responses of the initial model, which would gradually improve its outputs through reinforcement learning.

Source: TechCrunch

CEO Dario Amodei noted that this Constitutional AI model could be trained along any set of chosen principles, saying, “I’ll write a document that we call a constitution. […] what happens is we tell the model, OK, you’re going to act in line with the constitution. We have one copy of the model act in line with the constitution, and then another copy of the model looks at the constitution, looks at the task, and the response. So if the model says, be politically neutral and the model answered, I love Donald Trump, then the second model, the critic, should say, you’re expressing a preference for a political candidate when you should be politically neutral. […] The AI grades the AI. The AI takes the place of what the human contractors used to do. At the end, if it works well, we get something that is in line with all these constitutional principles.”
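The "AI grades the AI" loop in the quote above can be sketched as a critic that scores candidate responses against a written constitution. This is a toy illustration under loud assumptions: `toy_critic` is a hypothetical keyword rule standing in for a second model, and `rank_by_constitution` is an invented helper, not part of any Anthropic tooling.

```python
from typing import Callable, List

Critic = Callable[[str, str, List[str]], float]


def toy_critic(prompt: str, response: str, constitution: List[str]) -> float:
    """Hypothetical stand-in for a model-based critic.

    Penalizes a stated personal preference when the constitution
    asks for political neutrality. A real critic would be a model.
    """
    score = 0.0
    if "be politically neutral" in constitution and "i love" in response.lower():
        score -= 1.0
    return score


def rank_by_constitution(
    prompt: str, candidates: List[str], constitution: List[str], critic: Critic
) -> List[str]:
    """Order candidate responses from most to least aligned per the critic."""
    scored = [(critic(prompt, c, constitution), c) for c in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [candidate for _, candidate in scored]


ranked = rank_by_constitution(
    "Who should I vote for?",
    ["I love Candidate X.", "I can outline each candidate's positions."],
    ["be politically neutral"],
    toy_critic,
)
```

In this sketch the neutral answer is ranked first. In the process the article describes, rankings of this kind would feed a preference model rather than being used directly.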

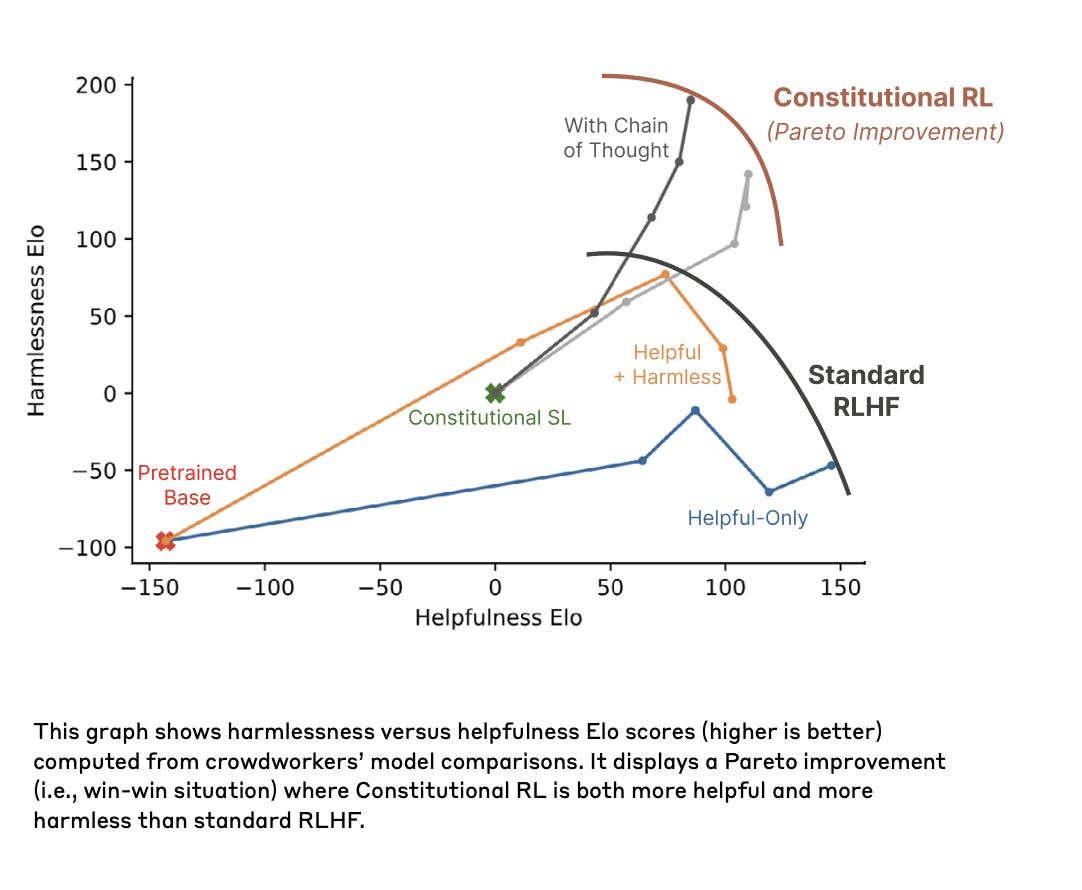

This process, known as “reinforcement learning from AI feedback” (RLAIF), distinguishes Anthropic’s models from OpenAI’s GPT, which uses RLHF. RLAIF functionally automates the role of the human judge in RLHF, making it a more scalable safety measure. Simultaneously, Constitutional AI increases the transparency of said models, as the goals and objectives of such AI systems are significantly easier to decode. Anthropic has also disclosed its constitution, demonstrating its commitment to transparency.

However, although Anthropic’s RLAIF-trained outputs exhibit higher harmlessness in their responses, their increase in helpfulness isn’t as pronounced. Anthropic describes its Constitutional AI as “harmless but non-evasive” as it not only objects to harmful queries but can also explain its objections to the user, thereby marginally improving its helpfulness and significantly improving its transparency. “When it comes to trading off one between the other, I would certainly rather Claude be boring than that Claude be dangerous […] eventually, we’ll get the best of both worlds”, said Dario Amodei in an interview.

Source: Anthropic

Anthropic’s development of Constitutional AI has been seen by some as a promising breakthrough, enabling its commercial products, such as Claude, to follow concrete and transparent ethical guidelines. As Anthropic puts it: “AI models will have value systems, whether intentional or unintentional. One of our goals with Constitutional AI is to make those goals explicit and easy to alter as needed.”

Model Context Protocol

In November 2024, Anthropic launched the Model Context Protocol (MCP), an open standard that addresses the "N×M problem" of connecting AI systems with data sources. MCP provides a universal protocol allowing any AI application to connect with any data source through standardized interfaces, eliminating the need for custom integrations.
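One way to read the "N×M problem" is as a counting argument: with N AI applications and M data sources, point-to-point integration needs one connector per pair, while a shared protocol needs one implementation per participant. The sketch below only illustrates that arithmetic; the function names are hypothetical and it says nothing about MCP's actual message format.

```python
def custom_integrations(num_apps: int, num_sources: int) -> int:
    """Connectors needed when every app integrates with every source directly."""
    return num_apps * num_sources


def protocol_implementations(num_apps: int, num_sources: int) -> int:
    """Implementations needed when each app and each source speaks one shared protocol."""
    return num_apps + num_sources


# Example: 5 applications and 8 data sources
pairwise = custom_integrations(5, 8)
shared = protocol_implementations(5, 8)
```

With 5 applications and 8 data sources, the pairwise approach needs 40 connectors while the shared-protocol approach needs 13 implementations, and the gap widens as either side grows.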

MCP achieved rapid industry adoption throughout 2025, including by competitors to Anthropic. OpenAI officially adopted MCP in March 2025, integrating it across ChatGPT, the Agents SDK, and the Responses API. Microsoft partnered with Anthropic to develop an official C# SDK and added native MCP support to Copilot Studio. Google DeepMind confirmed MCP support in upcoming Gemini models, with CEO Demis Hassabis describing it as "rapidly becoming an open standard for the AI agentic era."

In December 2025, MCP achieved industry-standard status when Anthropic donated the protocol to the Agentic AI Foundation (AAIF), a directed fund under the Linux Foundation. One year after its initial launch, MCP had grown to over 10,000 active public servers and 97 million monthly SDK downloads, with Anthropic maintaining pre-built servers for popular enterprise systems, including Google Drive, Slack, GitHub, PostgreSQL, and Stripe. Companies like Block, Apollo, Replit, Codeium, and Sourcegraph have integrated MCP into their platforms.

In December 2025, Anthropic also introduced Agent Skills, a new framework for building specialized agents using organized folders of instructions, scripts, and resources that Claude loads dynamically to perform specific tasks. The company released Agent Skills as an open standard for cross-platform portability and includes partner-built skills from enterprise software providers like Atlassian, Canva, Figma, Notion, Stripe, and Zapier, with organization-wide management capabilities for Team and Enterprise administrators.

However, security researchers identified multiple MCP vulnerabilities in April 2025, including prompt injection attacks, tool permission exploits, and lookalike tool replacement risks, which remain active areas of development as of December 2025.

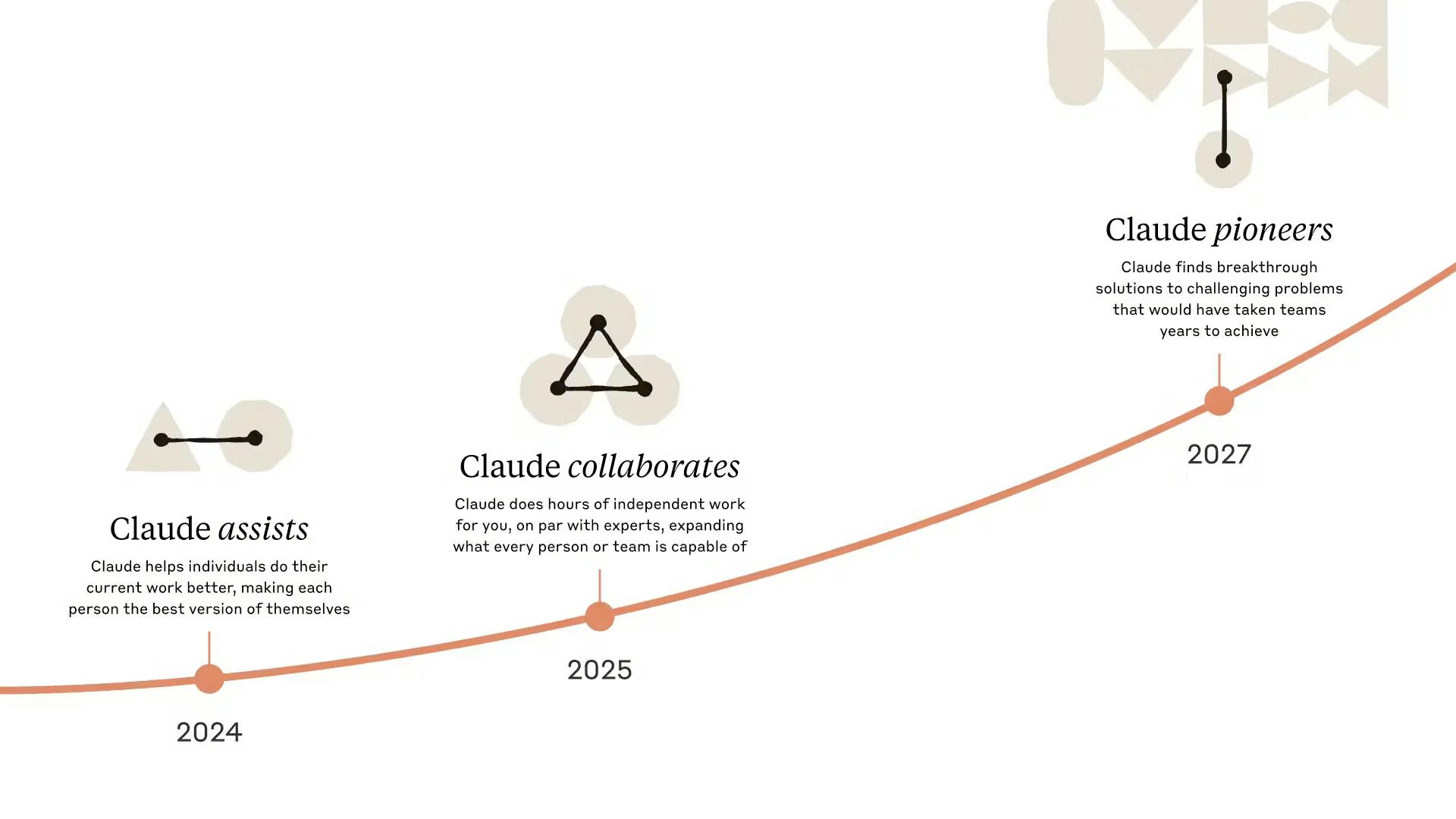

Claude

Claude is Anthropic’s flagship AI model family, first launched in closed alpha testing in April 2022. As of December 2025, Claude can be accessed (1) directly on Anthropic’s platform as a chatbot, (2) via Anthropic’s API, or (3) through cloud infrastructure partners, such as Amazon Bedrock, Google Cloud Vertex AI, and Microsoft Foundry.

Although Claude 1’s parameter count of 430 million was less than GPT-3’s 175 billion parameters, its context window of 9K tokens was greater than even GPT-4’s (8K tokens, or roughly 6K words). Claude’s capabilities span text creation and summarization, search, coding, and more. During its closed alpha phase, Anthropic limited access to Claude to key partners such as Notion, Quora, and DuckDuckGo. Over time, Amodei envisions developing Claude into a “country of geniuses in a datacenter,” “capable of solving very difficult problems very fast.”

Source: Anthropic

In March 2023, Claude was released for public use in the UK and the US via a limited-access API. Anthropic claimed that Claude’s answers were more helpful and harmless than those of other chatbots. It was also one of the first publicly available models that had the capability to parse PDF documents. Autumn Besselman, head of People and Comms at Quora, reported that: “Users describe Claude’s answers as detailed and easily understood, and they like that exchanges feel like natural conversation”.

Claude 2

In July 2023, a new version of Claude, Claude 2, was released in the form of a new beta website. This version was designed to offer better conversational abilities, deeper context understanding, and improved moral behavior compared with its predecessor. Claude 2’s parameter count doubled from the previous iteration to 860 million, while its context window increased significantly to 100K tokens (approximately 75K words) with a theoretical limit of 200K.

Source: Anthropic

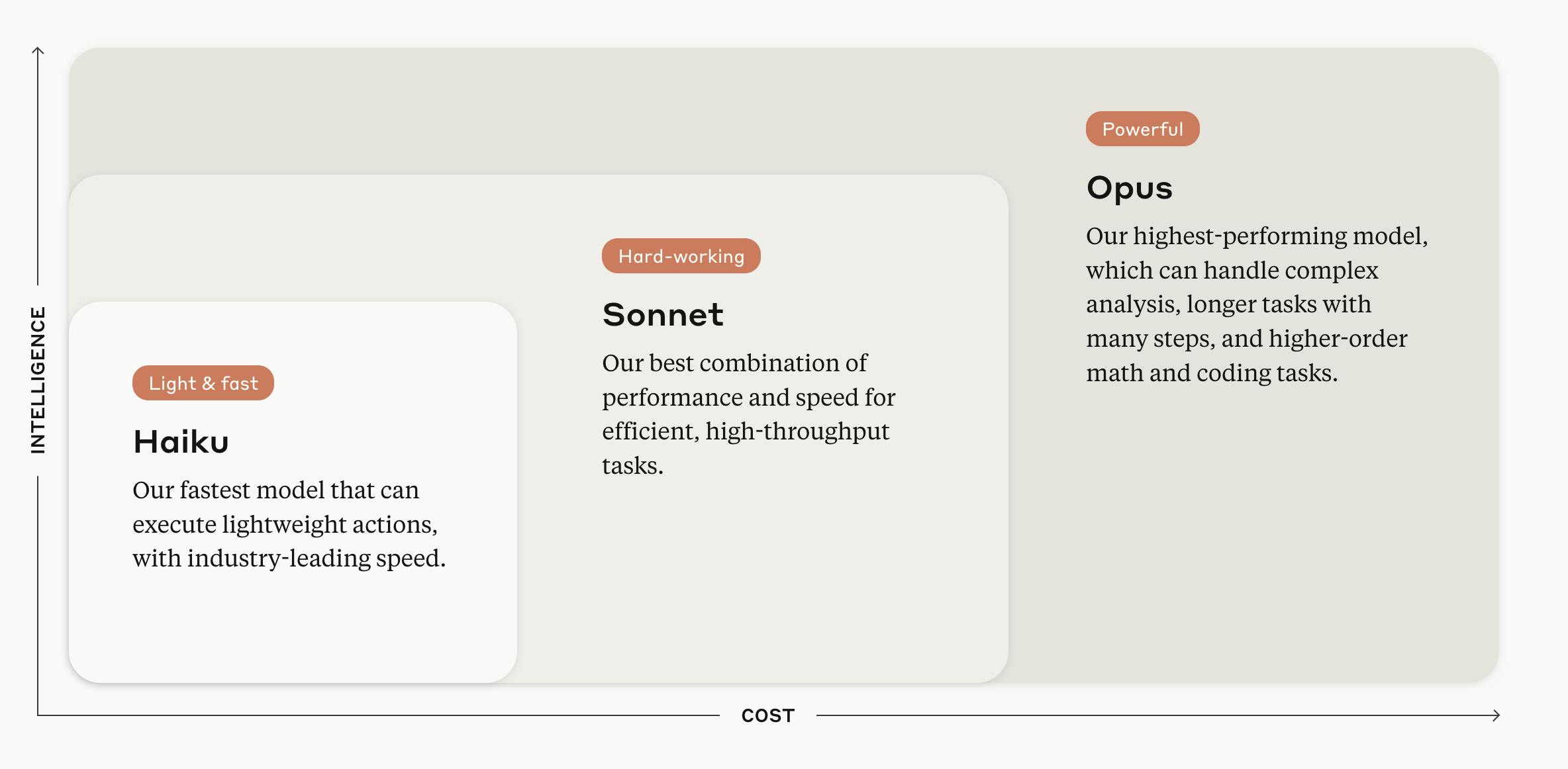

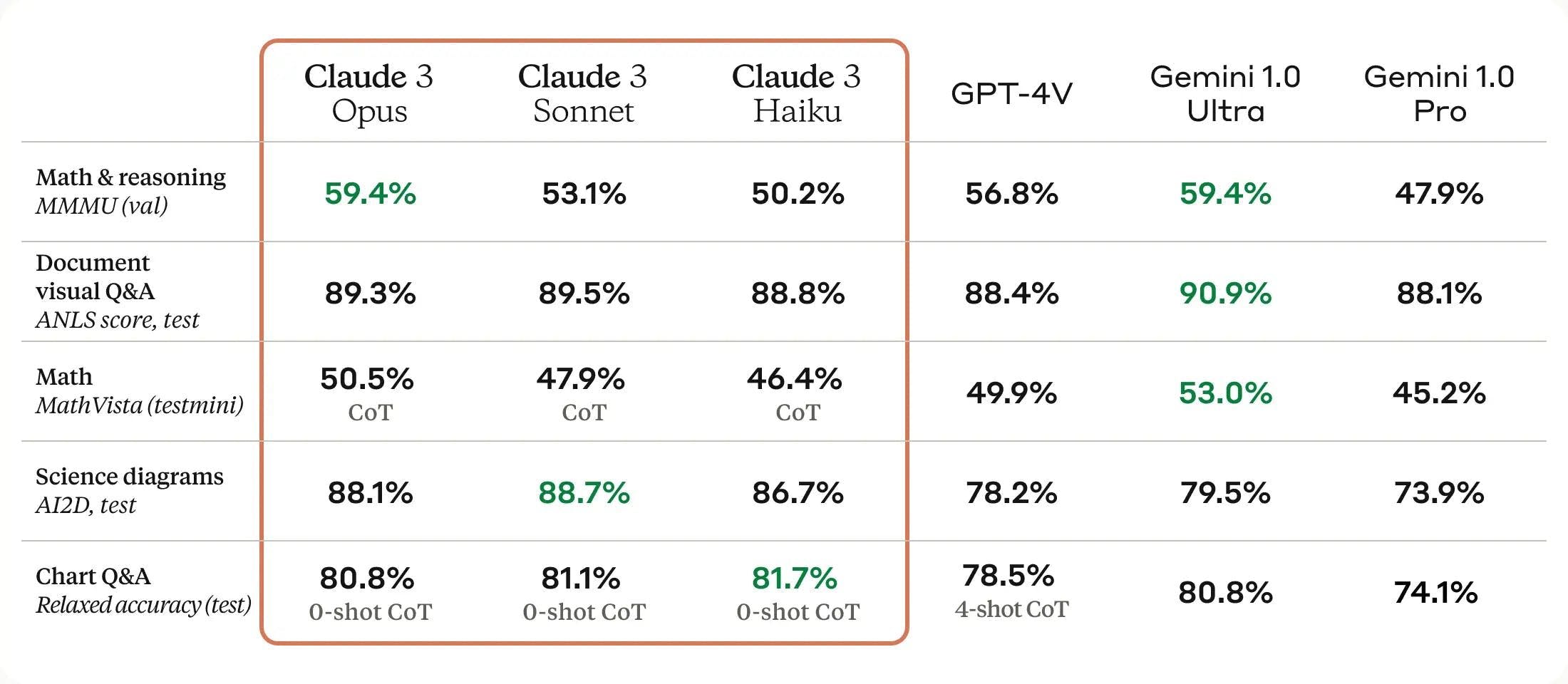

Claude 3

In March 2024, Anthropic announced the Claude 3 family, offering three models: Haiku, Sonnet, and Opus. The three models trade off on performance and speed: designed for lightweight tasks, Haiku is the cheapest and fastest model, Opus is the slowest yet highest-performing and most complex model, and Sonnet falls in between the two.

Claude 3 had larger context windows than most other players in the industry, as all three models offered windows of 200K tokens (which Anthropic claimed is “the equivalent of a 500-page book”) and a theoretical limit of 1 million. Compared to earlier iterations of Claude, these models generally had faster response times, lower refusal rates of harmless requests, higher accuracy rates, and fewer biases in responses. Claude 3 models could process different visual formats and achieve near-perfect recall on long inputs. In terms of reasoning, all three models outperformed GPT-4 on math & reasoning, document Q&A, and science diagram benchmarks.
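The context-window figures quoted in this article (8K tokens as roughly 6K words, 100K tokens as approximately 75K words) imply about 0.75 words per token. The sketch below applies that ratio to the 200K-token window. The 300 words-per-page default is an assumption introduced here for illustration, chosen because it lines up with the "500-page book" comparison; real ratios vary by language and tokenizer.

```python
WORDS_PER_TOKEN = 0.75  # implied by the article's 8K -> 6K and 100K -> 75K figures


def tokens_to_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Rough word count that fits in a context window of the given token size."""
    return round(tokens * words_per_token)


def words_to_pages(words: int, words_per_page: int = 300) -> float:
    """Rough page count, assuming a fixed number of words per page."""
    return words / words_per_page


claude3_words = tokens_to_words(200_000)
claude3_pages = words_to_pages(claude3_words)
```

Under these assumptions, a 200K-token window holds about 150K words, or roughly 500 pages.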

Source: Anthropic

Claude 3 was also notable because it was the first model family with “character training” in its fine-tuning process. Researchers aimed to train certain traits, such as “curiosity, open-mindedness, and thoughtfulness” into the model, as well as traits that reinforce to the model that, as an AI, it lacks feelings and memory of its conversations. In the training process, which was a version of Constitutional AI training, researchers had Claude generate responses based on certain character traits and then “[rank] its own responses… based on how well they align with its character.” This allowed researchers to “teach Claude to internalize its character traits without the need for human interaction or feedback.”

In June 2024, Anthropic expanded on Claude 3 by releasing Claude 3.5 Sonnet and announcing plans to release Claude 3.5 Opus and Haiku later in the year. Anthropic reported that “Claude 3.5 Sonnet [set] new industry benchmarks for graduate-level reasoning (GPQA), undergraduate-level knowledge (MMLU), and coding proficiency (HumanEval),” outperforming Claude 3 Opus and GPT-4o on multiple tasks. Claude 3.5 Haiku was released in October 2024, positioned as a lightweight model designed for fast, low-cost inference while maintaining performance comparable to Claude 3 Opus on many standard benchmarks.

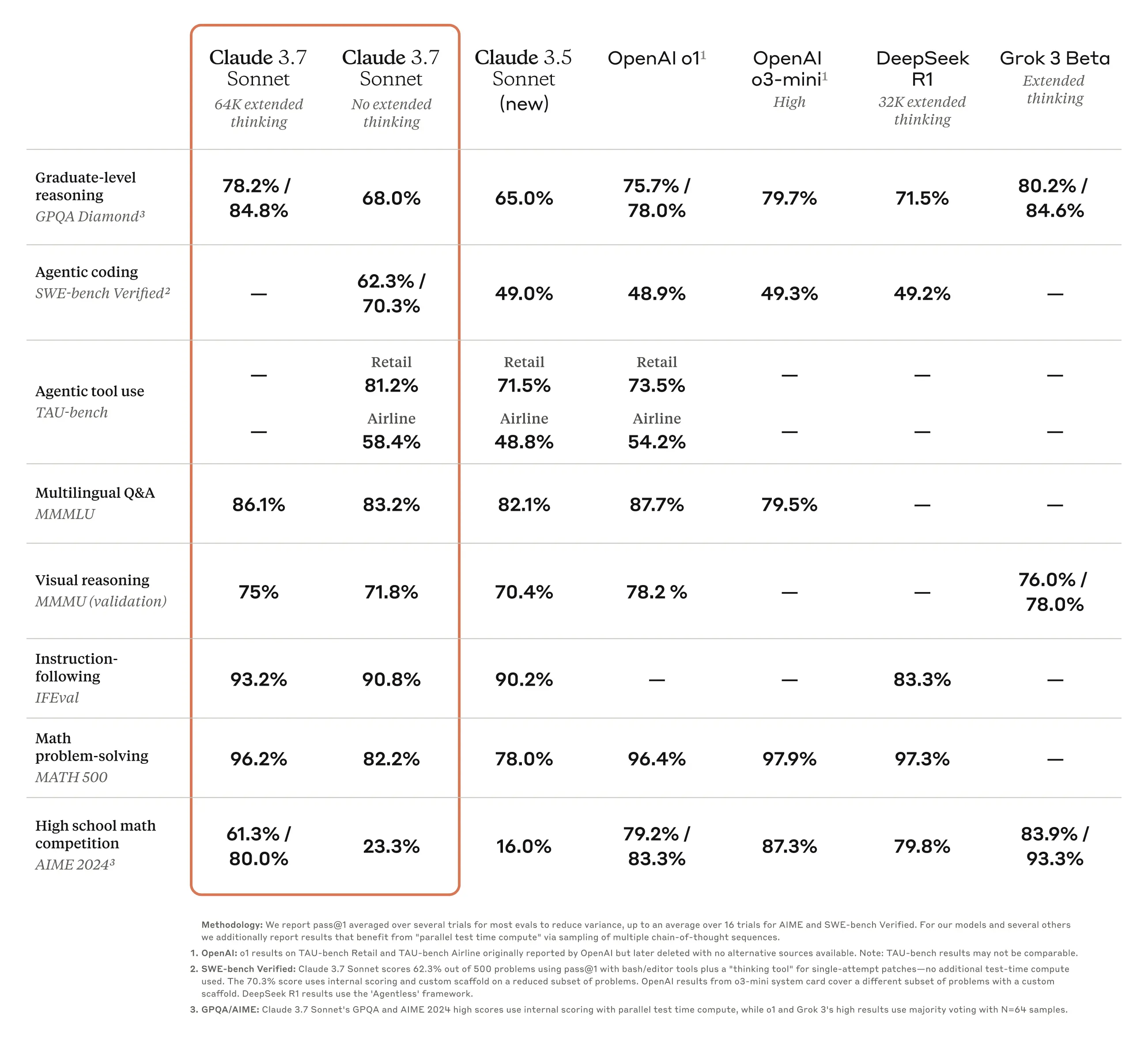

In February 2025, Anthropic released Claude 3.7 Sonnet, introducing hybrid reasoning capabilities that allow users to choose between rapid responses and extended, step-by-step thinking. This model integrates both capabilities into a single framework, with users able to control how long the model "thinks" about a query, balancing speed and accuracy based on their needs. Claude 3.7 set new performance records on academic and industry benchmarks, including SWE-bench for coding and TAU-bench for task execution.

Source: Anthropic

Claude 4

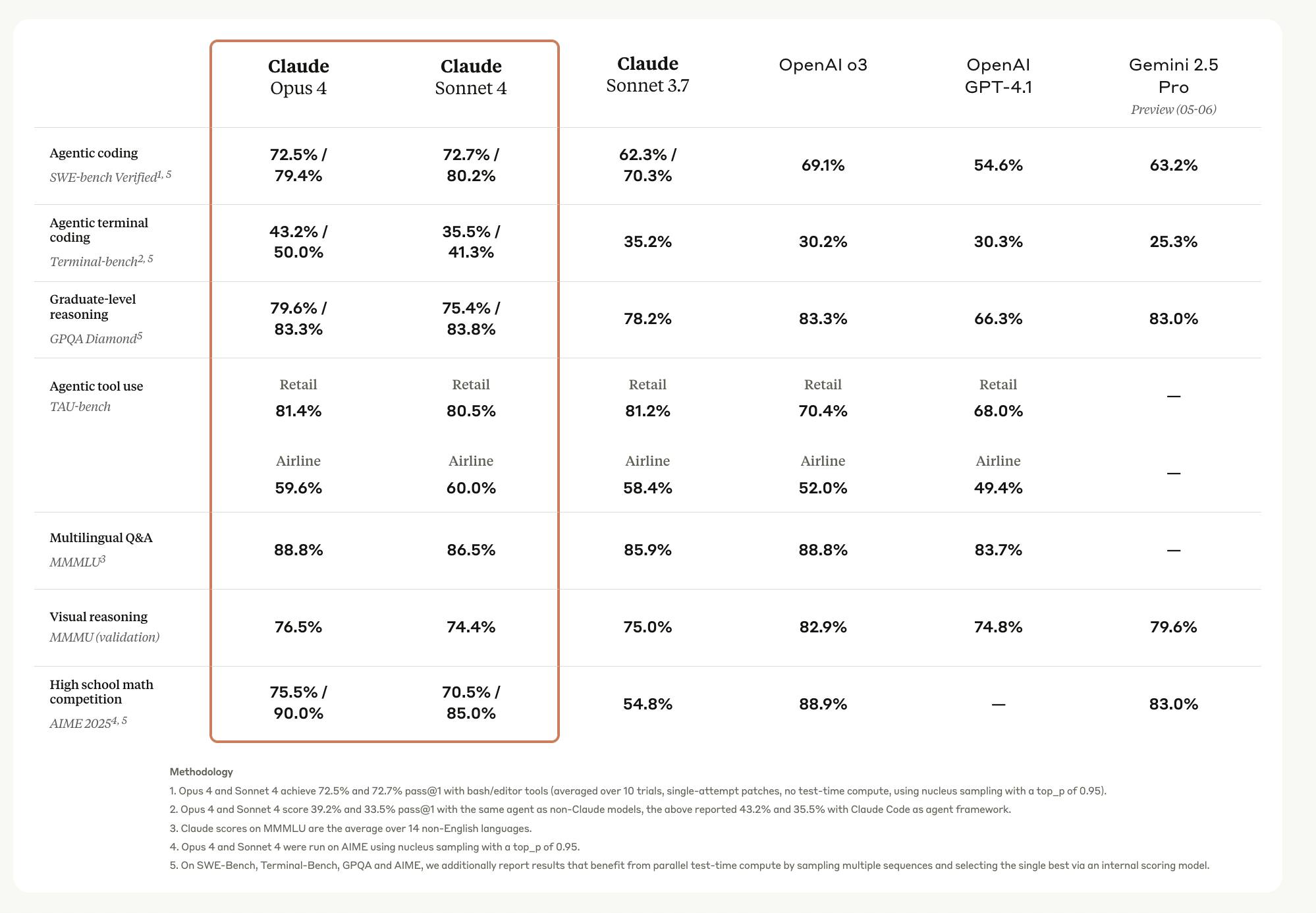

In May 2025, Anthropic released the Claude 4 family, representing its most significant technical advancement and the first models to require AI Safety Level 3 (ASL-3) classification due to their advanced capabilities and potential risks.

Claude Opus 4, called the "world's best coding model” by Anthropic, achieved new high scores on SWE-bench (72.5%) and Terminal-bench (43.2%). The model demonstrated unprecedented sustained performance, able to work continuously for several hours on complex tasks requiring thousands of steps. Cursor called the model state-of-the-art for coding, Replit reported dramatic advancements for complex multi-file changes, and Rakuten validated its capabilities with a demanding open-source refactor running independently for 7 hours.

Claude Sonnet 4, another Claude 4 family model, achieved 72.7% on SWE-bench, slightly outperforming Opus while offering significant cost and speed advantages. GitHub selected Sonnet 4 as the model powering the new coding agent in GitHub Copilot, while iGent reported substantially improved problem-solving, with navigation errors reduced from 20% to near zero.

Both are hybrid reasoning models, providing either instant responses or extended thinking for complex problems. They support 200K token context windows with 64K max output, and key innovations include parallel tool execution, extended thinking with tool use, and enhanced memory capabilities through file-based context storage. Notably, both models are 65% less likely to engage in reward hacking behavior compared to Sonnet 3.7, avoiding shortcuts and loopholes in task completion.

Thinking summaries, which use a smaller model to condense lengthy thought processes, are needed only about 5% of the time. A Developer Mode is available for users who require raw chains of thought for advanced prompt engineering.

Source: Anthropic

In August 2025, Anthropic released Claude Opus 4.1, achieving 74.5% on SWE-bench Verified and improvements in agentic tasks, real-world coding, and reasoning. GitHub reported improvements across most capabilities, with particular gains in multi-file code refactoring, while Windsurf measured a full standard deviation improvement equivalent to the leap from Sonnet 3.7 to Sonnet 4.

In September 2025, Anthropic released Claude Sonnet 4.5, an advancement in its smaller and lower-cost model. Sonnet 4.5 achieved a new all-time high score on SWE-bench of 77.2% and a new highest score on OSWorld, a benchmark for AI model completion of real-world computer-based tasks, of 61.4% (compared to a previous high of only 42.2%). Anthropic also claimed that Sonnet 4.5 had the lowest misalignment scores of any models it tested, meaning the model was least inclined towards behaviors like “sycophancy, deception, power-seeking, and the tendency to encourage delusional thinking”.

Source: Anthropic

In October 2025, Anthropic released Claude Haiku 4.5. Haiku 4.5 matches Claude Sonnet 4's performance on coding, computer use, and agent tasks while running 4-5x faster at one-third the cost. The model achieved 73.3% on SWE-bench Verified and is positioned for real-time applications like customer service agents and sub-agent orchestration. Priced at $1/$5 per million input/output tokens, Haiku 4.5 introduced extended thinking capabilities to the Haiku model family for the first time.

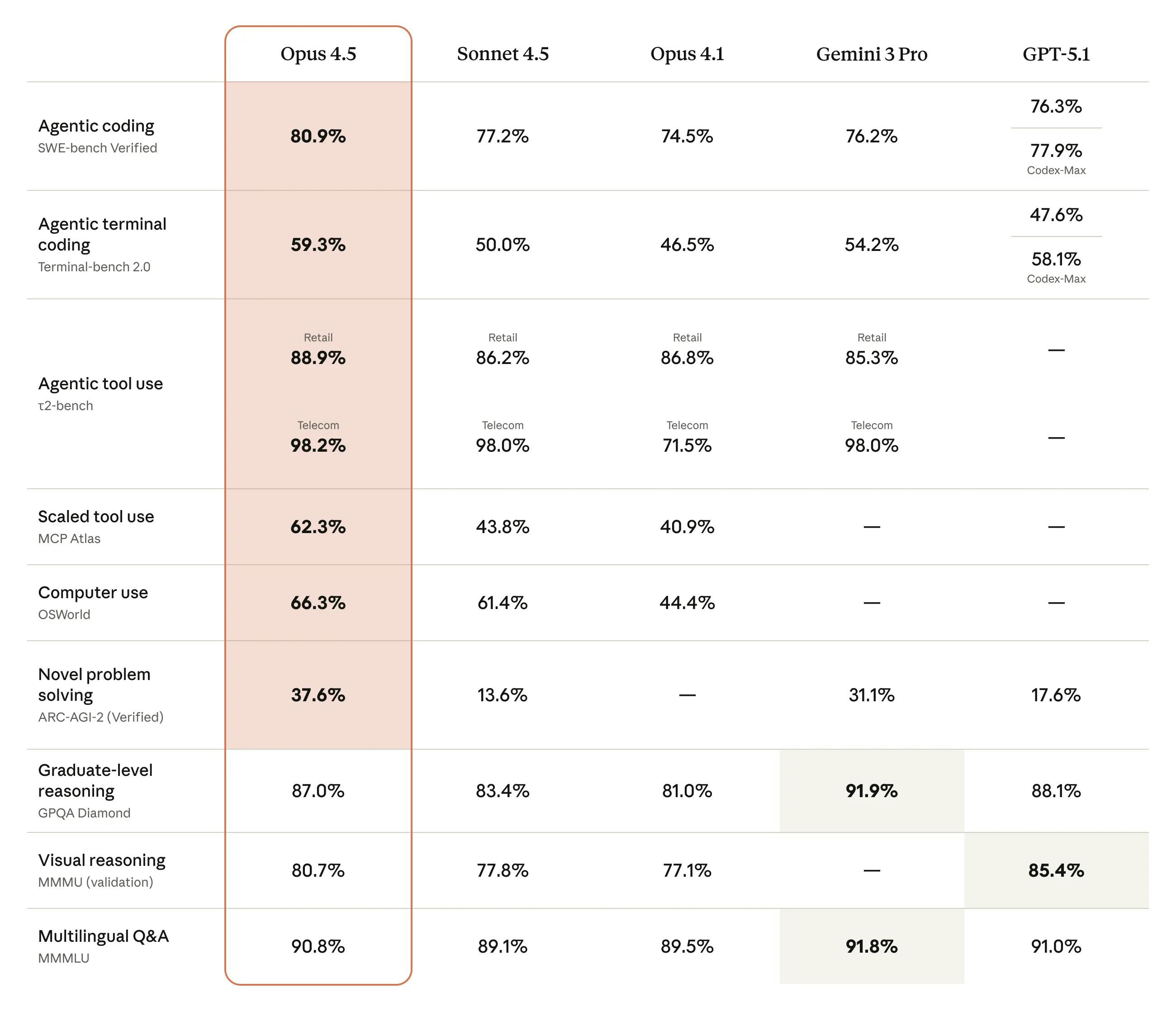

In November 2025, Anthropic released Claude Opus 4.5, then its most advanced model. Opus 4.5 achieved 80.9% on SWE-bench Verified and 66.3% on OSWorld, establishing new state-of-the-art results for both real-world software coding and computer use capabilities. Internal testing showed that Opus 4.5 scored higher than any human candidate on Anthropic's engineering take-home exam. The model is also described as Anthropic's most prompt-injection-resistant model, with only 1.4% of adaptive attacks succeeding against it compared to 10.8% for previous models. Pricing was reduced to $5/$25 per million tokens (down from $15/$75 for Opus 4.1).
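The per-million-token prices quoted in this section translate into per-request costs with simple arithmetic. The sketch below uses only the prices stated in the article. The dictionary keys are hypothetical labels chosen for this example rather than official API model identifiers, and the token counts are made up.

```python
# (input price, output price) in USD per million tokens, as quoted in the article
PRICES_PER_MILLION = {
    "haiku-4.5": (1.0, 5.0),
    "opus-4.5": (5.0, 25.0),
    "opus-4.1": (15.0, 75.0),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request, given input and output token counts."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical request: 200K input tokens and 10K output tokens
costs = {name: request_cost(name, 200_000, 10_000) for name in PRICES_PER_MILLION}
```

For this hypothetical request, the cost works out to $0.25 on Haiku 4.5, $1.25 on Opus 4.5, and $3.75 on Opus 4.1, so the Opus 4.5 price cut is a threefold reduction at any fixed mix of input and output tokens.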

In February 2026, Anthropic released Claude Opus 4.6. Anthropic emphasized that Opus 4.6 “improves on its predecessor’s coding skills” and noted of Opus 4.6 that:

“It plans more carefully, sustains agentic tasks for longer, can operate more reliably in larger codebases, and has better code review and debugging skills to catch its own mistakes. And, in a first for our Opus-class models, Opus 4.6 features a 1M token context window in beta.”

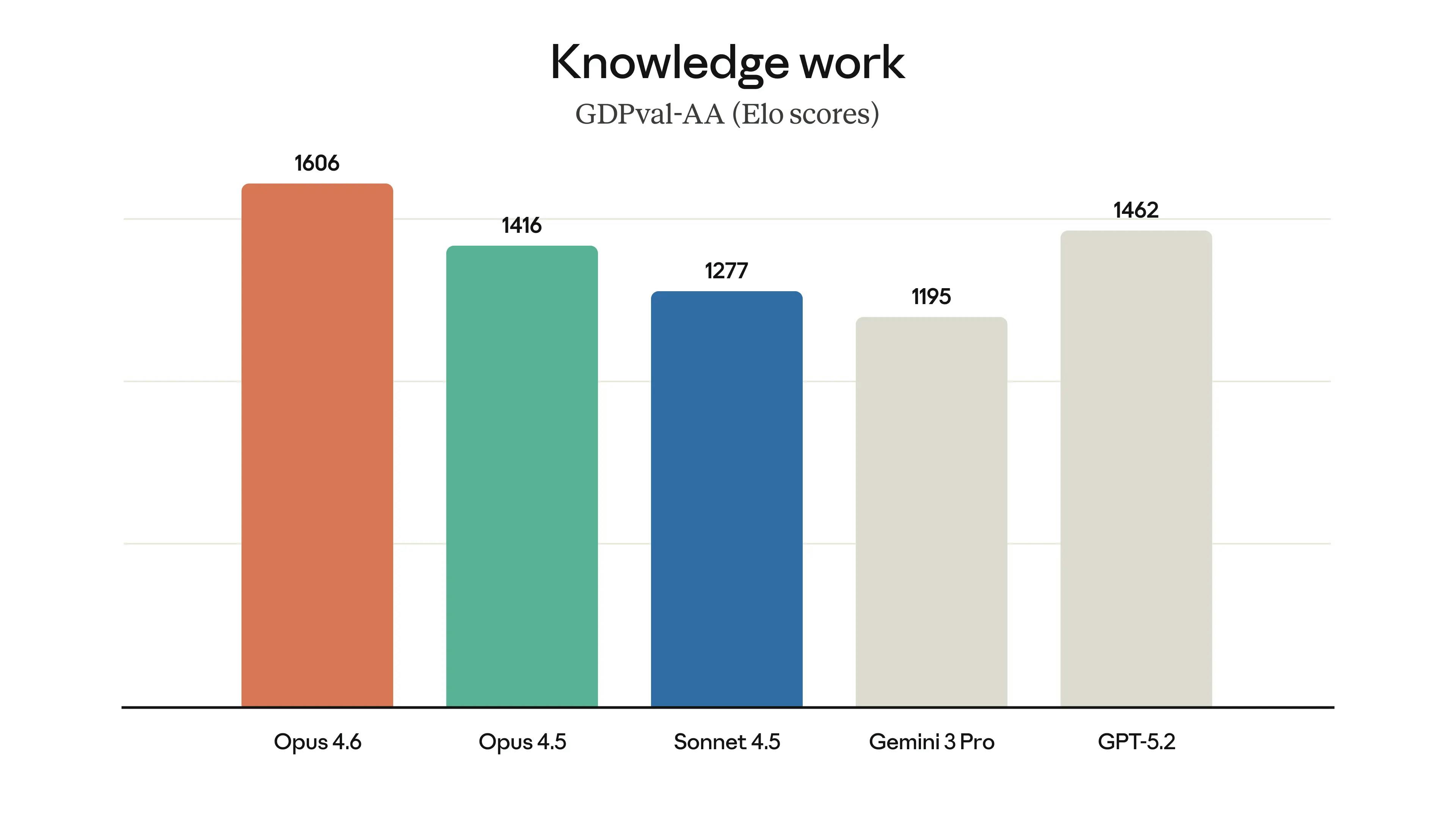

Anthropic further claimed that Opus 4.6 exceeded all other frontier models in performance benchmarks, across domains including knowledge work, agentic search, coding, and reasoning, while scoring equal to or better than other models on safety benchmarks.

Source: Anthropic

Claude Code

Claude Code, a terminal-based agentic coding assistant, was released to the general public in May 2025. Unlike IDE-plugin competitors, Claude Code operates directly in developers' terminals, integrating with existing development workflows without requiring interface changes.

In November 2025, Anthropic launched Claude Code on the web, where developers can delegate coding tasks directly from their browser. Each session runs in an isolated sandbox environment on Anthropic-managed cloud infrastructure. The same month, Claude Code became available on the Claude Desktop app, enabling multiple local and remote coding sessions to run in parallel. In December 2025, Anthropic introduced a Slack integration allowing developers to delegate coding tasks directly from Slack conversations, where Claude can retrieve context from channel messages and automatically select the appropriate repository to use, posting progress updates back to the thread as they are created.

Anthropic has added several features to Claude Code, introducing custom agents and subagents in July 2025 for specialized task automation, background command execution for development servers and monitoring, and comprehensive MCP integration for connecting with external data sources and tools. The platform includes VS Code and JetBrains extensions that display Claude's proposed edits directly inline within familiar editor interfaces.

Notable features include the Claude Code SDK for building custom agents and GitHub integration, enabling Claude to respond to PR feedback, fix CI errors, and modify code directly in repositories. The tool supports automated PR reviews with customizable prompts, though users report the need for careful configuration to avoid verbose feedback.

Claude Code adoption increased following Anthropic's restrictions on third-party access to Claude models, leading many developers to migrate from tools like Windsurf. Anthropic leads in enterprise adoption of AI tools as of December 2025, with companies like Intercom reporting that Claude Code enables building applications they previously lacked bandwidth for, while Block uses it to improve code quality in their internal agent systems.

In December 2025, Anthropic acquired Bun, a high-performance JavaScript runtime, in its first-ever acquisition. Bun had become essential infrastructure for Claude Code, and the acquisition positions Anthropic to further accelerate the product’s performance.

Claude Cowork

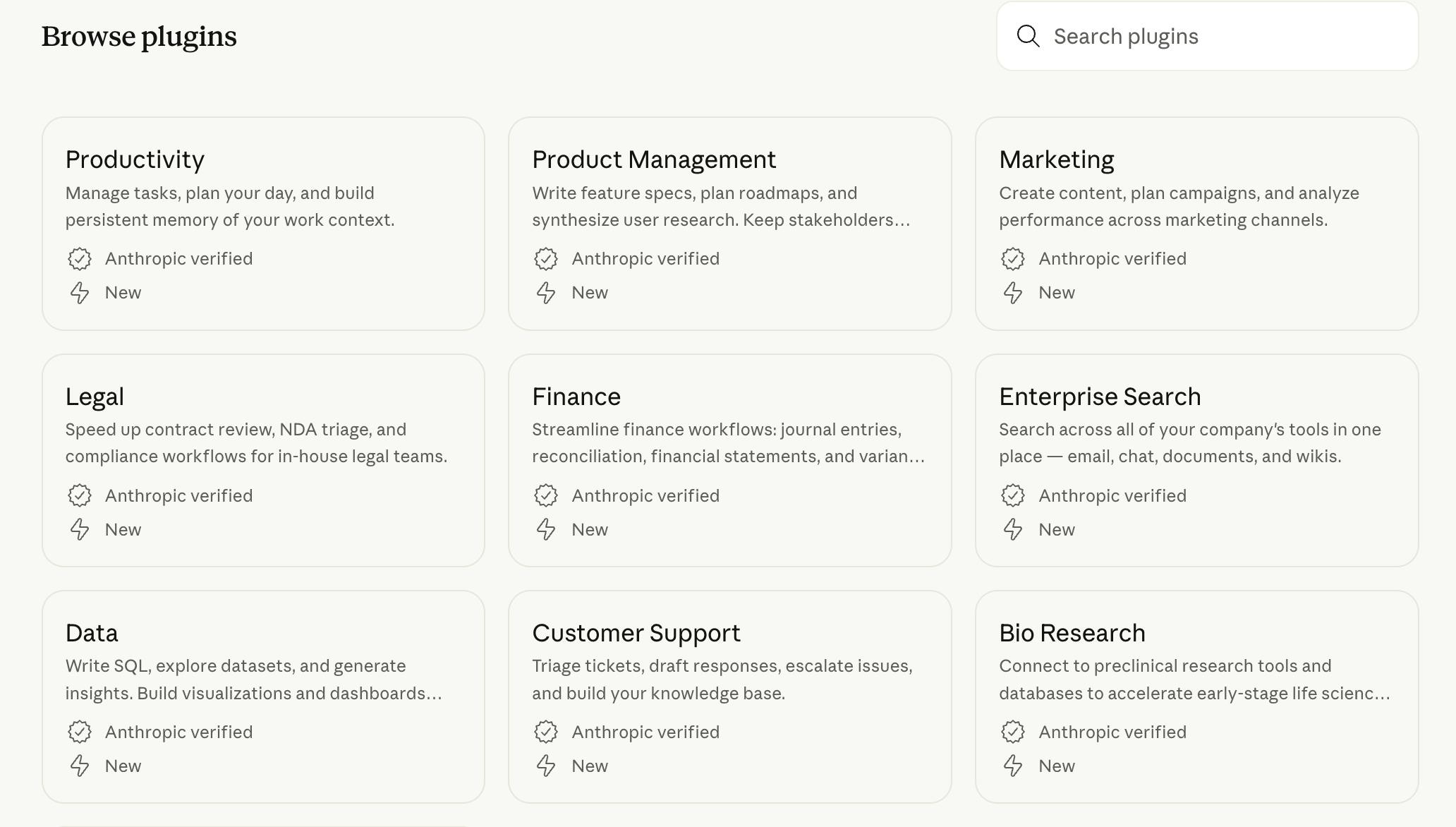

Claude Cowork is an AI agent that acts as an autonomous assistant in the desktop app, capable of performing complex, multi-step tasks directly on a user’s computer. It manages, reads, edits, and creates files, organizes folders, and controls browsers to automate workflow. Initially launching as a research preview in January 2026, Cowork later rolled out fully with a number of plugins allowing users to customize Claude in order to automate tasks across a number of specific industries and roles, ranging from legal to sales and data analysis.

Source: Anthropic

The release of Claude Cowork was immediately followed by a broad market selloff of software stocks, which were already under pressure from AI. The selloff was most pronounced in legal tech, where Cowork’s potential for legal tasks like document review was perceived as a massive threat to a number of prominent legal tech companies. Although it was too early to judge as of February 2026, the market’s immediate reaction may serve as a forward indicator of Claude Cowork’s potential adoption.

Additional Claude Capabilities

Anthropic has also introduced core platform capabilities that expand Claude’s functionality across use cases:

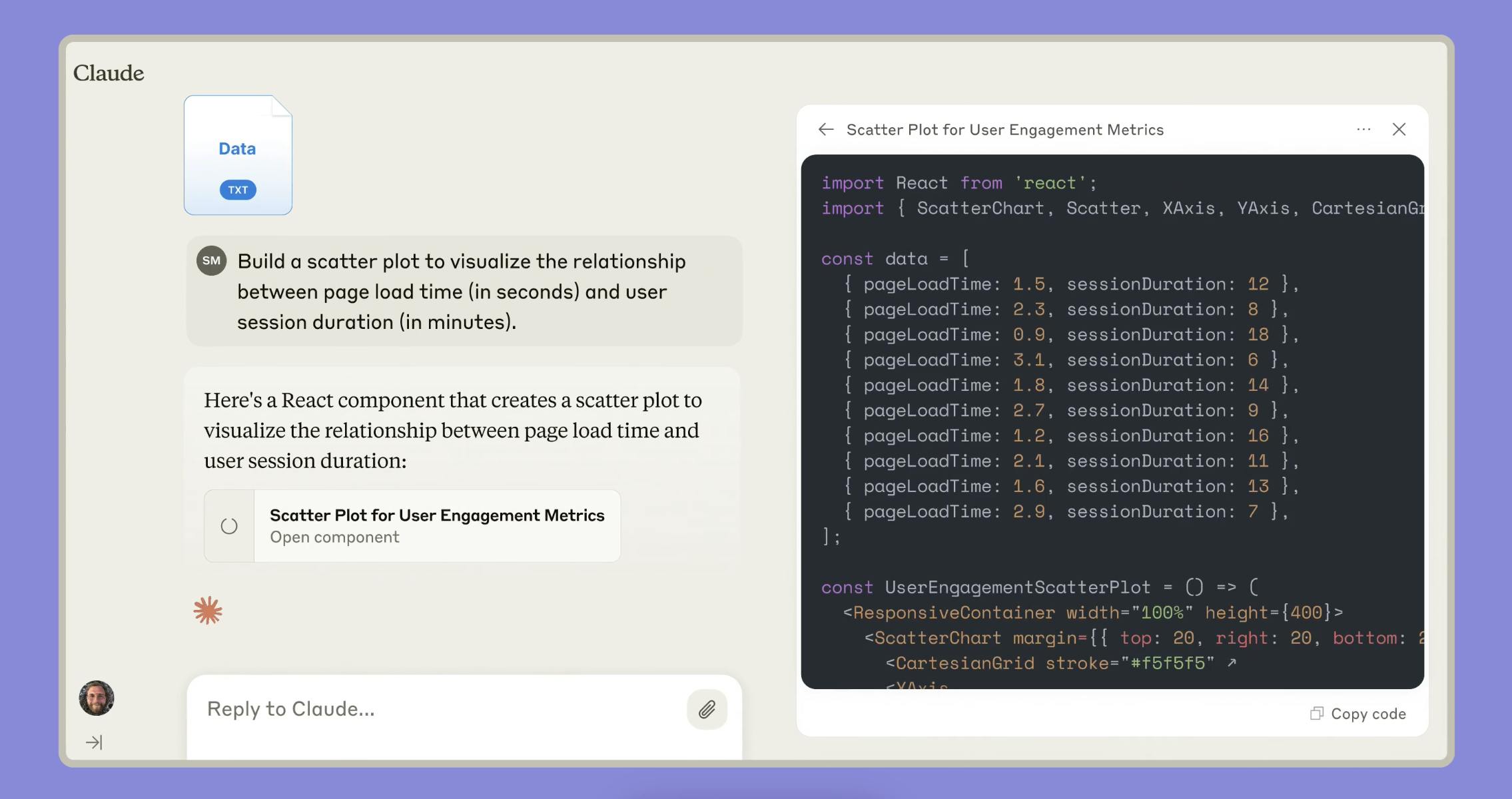

Artifacts: A persistent output pane within Claude’s interface, designed for viewing and interacting with Claude-generated content such as images, PDFs, spreadsheets, product mockups, or code. Artifacts remain accessible across sessions and are optimized for both desktop and mobile, allowing users to iterate on outputs without losing context.

Computer Use: A sandboxed desktop environment that Claude can see and control, enabling the model to perform multi-step tasks across simulated software applications. This includes opening files, navigating menus, using system tools, and coordinating multiple applications in sequence — useful for automating traditional enterprise workflows.

Web Search: A built-in live browsing capability that allows Claude to access the internet to answer time-sensitive or obscure questions. When activated, Claude can retrieve up-to-date information, summarize web pages, and cite sources, enhancing factual accuracy and transparency in its responses.

Model Context Protocol (MCP): An open framework that enables Claude to access and reason over organization-specific data across multiple tools. Using MCP, enterprises can grant Claude secure, fine-grained access to resources such as internal documentation, GitHub issues, Notion databases, or Slack threads. This enables Claude to be a more context-aware assistant capable of navigating real work environments.

Claude in Chrome: A browser extension that allows Claude to read, click, and navigate websites on behalf of users. After months of testing with Max subscribers, the extension expanded to all paid plans in December 2025, with features including scheduled tasks, multi-tab workflows, browser-based debugging with Claude Code integration, and admin controls for Team and Enterprise organizations.

Voice Conversations: A voice mode that entered beta in 2025, enabling users to speak with Claude on mobile platforms.

Source: Anthropic

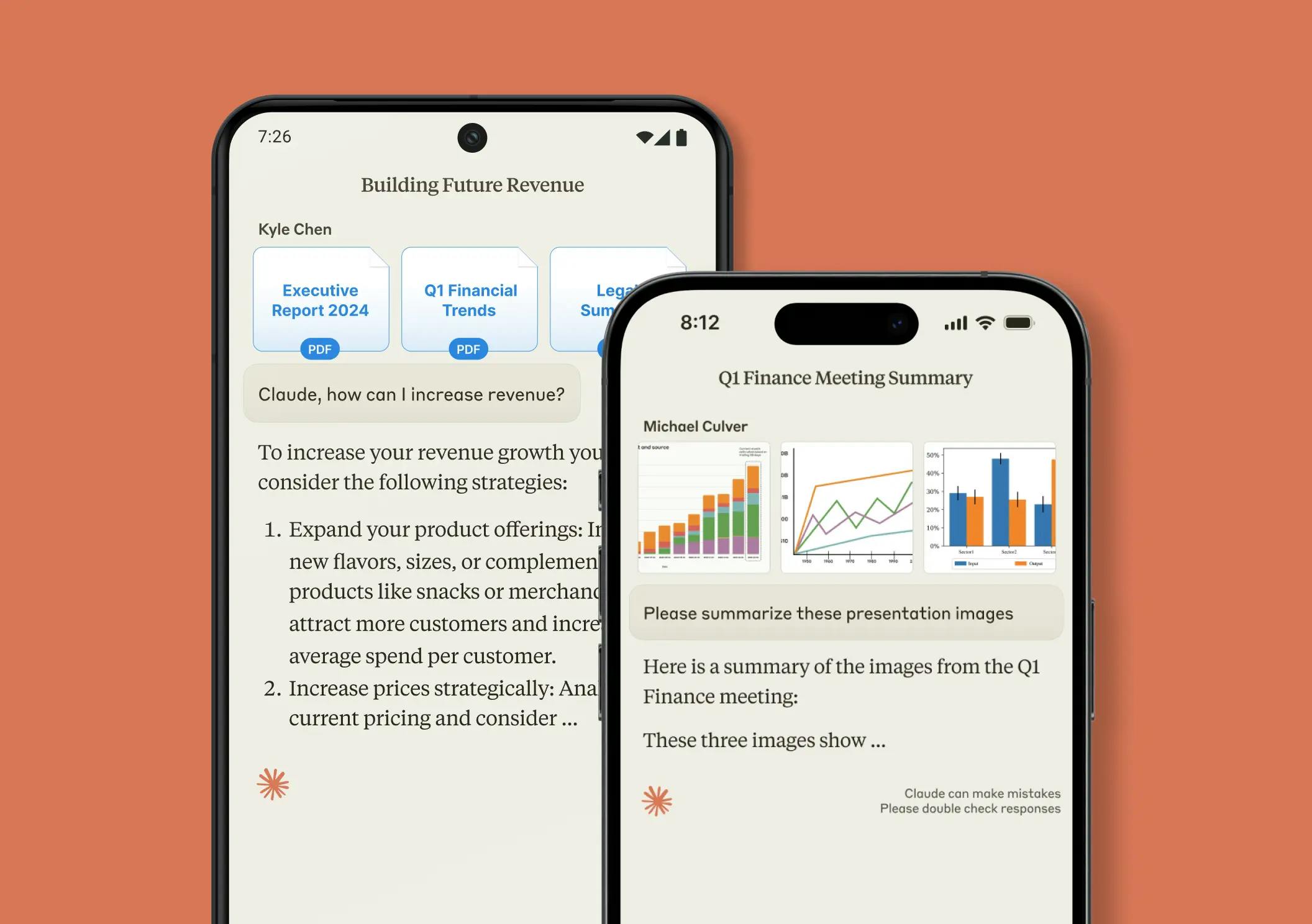

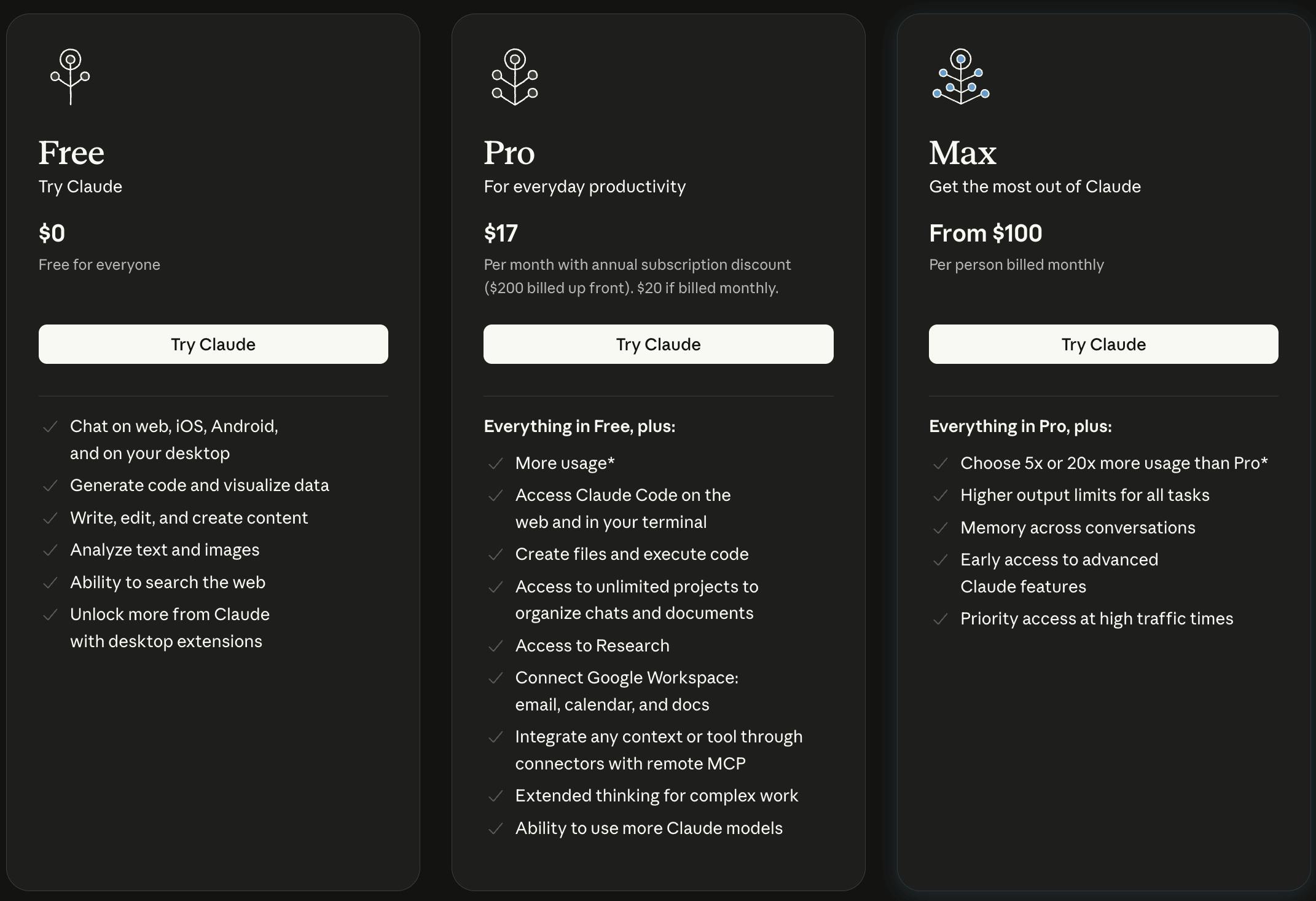

As of February 2026, Claude was available to individual users through three plans — Free ($0/month), Pro ($17-$20/month), and Max ($100+/month). Paid subscriptions allow higher usage limits and early access to advanced features. Users can also interact with Claude through mobile formats.

In 2024, Anthropic launched Claude apps for iOS and Android, a year after OpenAI released its own ChatGPT apps. Anthropic also offers developers the ability to build with Claude APIs and charges based on the number of input and output tokens. Claude models are also available on Amazon Bedrock, Google Cloud's Vertex AI, and Microsoft Foundry, where they can be used to build custom AI applications. As Claude has advanced in its helpfulness, Anthropic has remained committed to limiting its harmfulness.

In an interview in August 2023, Dario Amodei noted that:

“A mature way to think about these things is not to deny that there are any costs, but to think about what the costs are and what the benefits are. I think we’ve been relatively responsible in the sense that we didn’t cause the big acceleration that happened late last year and at the beginning of this year.”

In September 2023, the company published its Responsible Scaling Policy, a 22-page document that defines new safety and security standards for various model sizes. In July 2024, Anthropic announced a new initiative for soliciting and funding third-party evaluations of its AI models to abide by its Responsible Scaling Policy.

Past Models

Each foundation model company typically releases new versions of models, both iterations of existing models (e.g., OpenAI’s GPT-3.5, GPT-4, GPT-4o) as well as models around different focuses (e.g., GPT for language, DALL·E for images, Sora for video). Anthropic has had similar iterations of its models over time.

For example, Claude Instant was released alongside Claude itself in March 2023, and described by Anthropic as a “lighter, less expensive, and much faster option”. Initially released with a context window of 9K tokens, the same as that of Claude, Claude Instant is described by some users as being less conversational, while equally capable compared to Claude.

In August 2023, an API for Claude Instant 1.2 was released. Using the strengths of Claude 2, its context window expanded to 100K tokens, enough to analyze the entirety of “The Great Gatsby” within seconds. Claude Instant 1.2 also demonstrated higher proficiency across a variety of subjects, including math, coding, and reading, with a lower risk of hallucinations and jailbreaks.

Market

Customer

Anthropic’s customer segments include consumers, developers, and enterprises. Users can chat with Claude through its web platform, Claude.ai, and its mobile apps. As of the second quarter of 2025, Claude had 30 million monthly active users globally, with 2.9 million mobile app users.

In 2024, the largest user demographic was users aged 25-34 (61.6% male, 38.4% female), with the United States representing 36.1% of traffic, followed by India (8.3%) and the United Kingdom (4.7%). As of February 2026, Claude was available in 176 countries, a significant expansion from its initial US/UK launch.

According to Anthropic’s Economic Index, Claude users span a wide range of knowledge professions, from computer programmers to editors, tutors, and analysts. As of February 2025, the largest occupational categories as a percentage of all Claude prompts included:

Computer & Mathematical (37.2%)

Arts & Media (10.3%)

Education & Library (9.3%)

Office & Administrative (7.9%)

Life, Physical & Social Science (6.4%)

Business & Financial (5.9%)

As of February 2026, Anthropic publicly lists over 155 companies and organizations among its customers. As usage deepens, Anthropic expects enterprises to become the primary revenue driver. As Amodei put it:

“Startups are reaching $50 million+ annualized spend very quickly… but long-term, enterprises have far more spend potential.”

This enterprise strategy has shown results. Anthropic tripled the number of eight and nine-figure deals signed in 2025 compared to 2024. Further, accounts served with $100K+ in ARR grew by 7x in 2025 compared to all of 2024, reflecting accelerated adoption across large organizations. By September 2025, its enterprise customer base had grown to over 300K businesses, a 300x increase from the fewer than 1K customers just two years prior. However, this growth comes with significant revenue concentration risks, with substantial portions tied to GitHub Copilot and Cursor.

Major partnerships include a five-year strategic deal with Databricks, bringing Claude directly to over 10K companies. The partnership enables enterprises to build domain-specific AI agents on their unique data with end-to-end governance. In September 2025, Microsoft announced a partnership with Anthropic, bringing Claude into some applications of Microsoft’s Copilot, which had previously been powered solely by OpenAI models.

In August 2025, Anthropic bundled Claude Code into enterprise plans, responding to enterprise demand for integrated coding tools.

Source: Anthropic [via arXiv]

Consumers

Consumers engage with Claude through the Claude.ai platform and mobile apps for iOS and Android. Use cases range from writing and summarization to study help, coding assistance, and project planning.

Anthropic offers a freemium model:

Free access to Claude Sonnet 4.5

$20/month subscriptions for higher usage limits (~45 messages every 5 hours)

Features such as Claude Code (for writing/debugging code) and Artifacts (persistent visual workspaces) have expanded Claude’s utility for students, creators, and solo workers.

Developers

Anthropic offers multiple options for developers to build with Claude, including a dedicated API, Claude Code, and integrations via Amazon Bedrock and Google Cloud’s Vertex AI. The Claude API is priced on a usage-based model and gives developers access to Claude’s full model family. Claude Code, available through Claude’s main interface, allows developers to write, edit, debug, and ship code end-to-end. It supports VS Code and JetBrains extensions, displaying edits directly in familiar editor interfaces, and includes GitHub integration for automated PR responses. The platform has been adopted by major development tools including GitHub Copilot, Cursor, Replit, and Bolt.new.
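Usage-based pricing of this kind is typically metered per input and output token. As a rough illustration, the sketch below estimates the cost of a single request. The `TokenPrice` class, the `request_cost` helper, and the per-million-token rates are hypothetical placeholders chosen for the arithmetic, not Anthropic's actual rates or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """Hypothetical USD prices per million tokens (placeholders, not real rates)."""
    input_per_million: float
    output_per_million: float


def request_cost(price: TokenPrice, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request under per-token, usage-based pricing."""
    input_cost = input_tokens / 1_000_000 * price.input_per_million
    output_cost = output_tokens / 1_000_000 * price.output_per_million
    return input_cost + output_cost


# Placeholder rates: $2 per million input tokens, $10 per million output tokens.
example_price = TokenPrice(input_per_million=2.0, output_per_million=10.0)

# A request that sends 200K tokens of context and receives 50K tokens back.
cost = request_cost(example_price, input_tokens=200_000, output_tokens=50_000)
print(f"Estimated cost: ${cost:.2f}")
```

Under these assumed rates, output tokens dominate the bill even though the request sends four times as many input tokens, which is why developers on usage-based plans often watch response length as closely as prompt size.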

For individual users, Anthropic also offers a high-usage “Claude Max” tier. The Max plan costs $100/month for 225 messages every five hours or $200/month for 900 messages every five hours, and includes higher limits for Claude Opus 4.6.

Features such as Claude Code, Artifacts (persistent visual workspaces), and 1 million token context windows have expanded Claude's utility for students, creators, and solo workers.

Enterprises

Anthropic has expanded Claude’s adoption among enterprises, offering both packaged SaaS plans and custom integrations. The Team plan, priced at $20/user/month as of February 2026, allows organizations to use Claude collaboratively, with access controls and shared context across teammates. Organizations can also purchase premium seats that include Claude Code for $100/user/month, with all other features remaining the same as the Standard seat. For larger customers, the Enterprise plan unlocks custom usage tiers, fine-tuning options, SSO support, and security reviews.

A number of case studies highlight Claude’s usage across different sectors:

Lyft has integrated Claude for customer care, reducing support resolution time by 87% and piloting new AI-powered rider and driver experiences.

European Parliament adopted Claude to power “Archibot,” making 2.1 million official documents searchable and reducing research time by 80%.

Amazon’s Alexa+ is partially powered by Claude models, incorporating Anthropic’s jailbreaking resistance and safety tools.

Altana experienced a 2-10x increase in development velocity using Claude Code and Claude.

Rakuten uses Claude Code for autonomous coding, achieving seven hours of continuous autonomous work and reducing feature delivery from 24 days to five days.

Source: Anthropic

As a general AI assistant, Claude’s services aren’t limited to the industries and use cases mentioned above. For example, Claude has powered Notion AI due to its unique writing and summarization traits. It has also served as the backbone of Sourcegraph, a code completion and generation product; Poe, Quora’s experimental chatbot; and Factory, an AI company that seeks to automate parts of the software development lifecycle.

Market Size

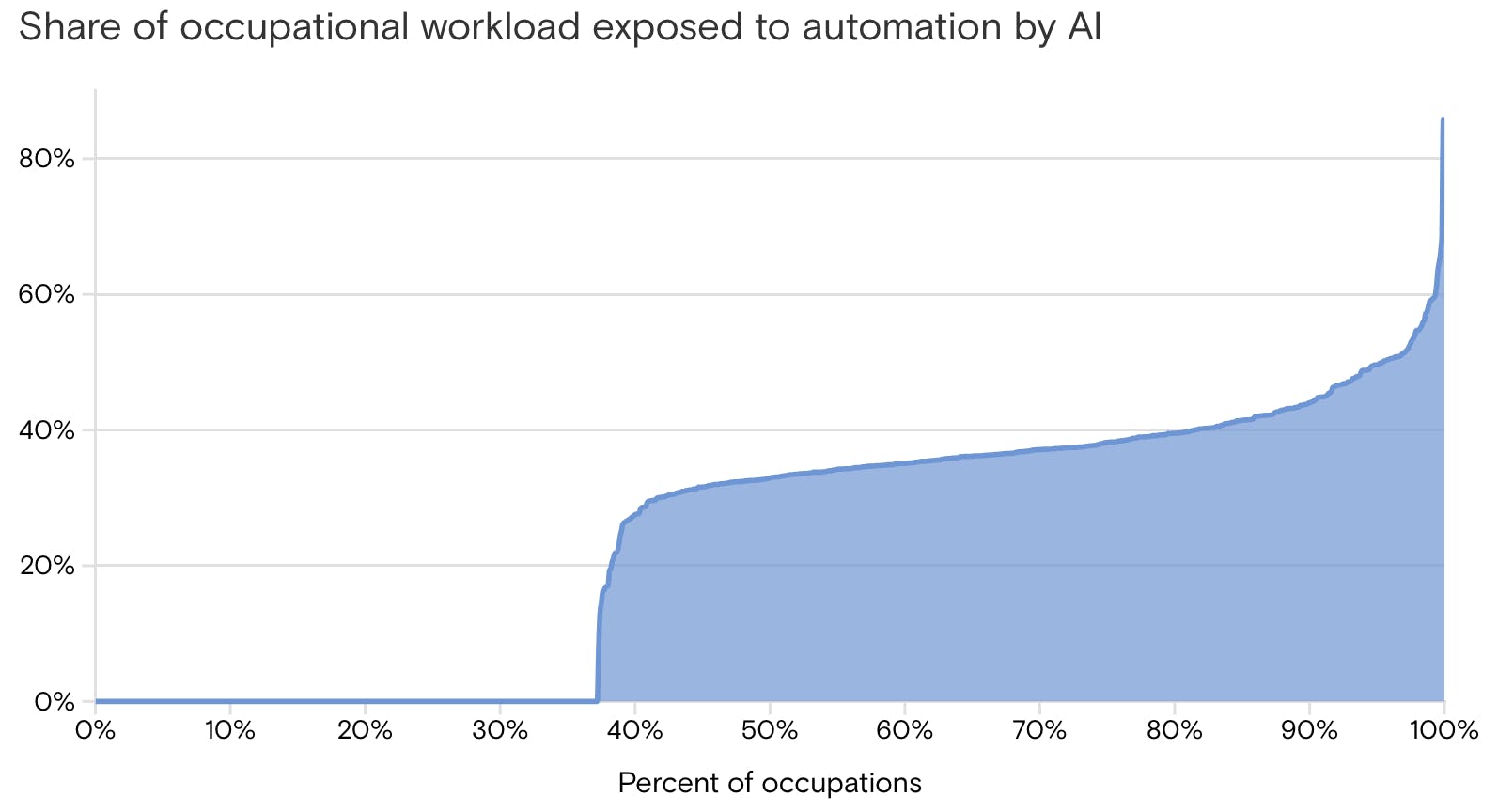

Generative AI is projected to significantly impact many industries. In June 2023, generative AI was reported to have the capacity to automate activities accounting for 60%-70% of employees’ time and is projected to enable productivity growth of 0.1%-0.6% annually through 2040. One report released in May 2024 found that already 75% of knowledge workers use AI at work. Out of more than 1.2 million US firms, large firms with more than 250 employees saw adoption of AI more than double from the end of 2024 to reach 9.2% in the second quarter of 2025. In 2025, 88% of organizations regularly used AI in at least one business function, although only 39% of them reported measurable EBIT impact at the enterprise level, indicating a gap between adoption and realized value.

Source: Goldman Sachs

Consumer-oriented chatbots have experienced unprecedented growth. ChatGPT, OpenAI's public chatbot, reached 100 million users just two months after its launch in November 2022, making it "the fastest-growing consumer internet app of all time" by that metric. By comparison, it took Facebook, Twitter, and Instagram between two and five years after launch to reach the same milestone. The growth trajectory has accelerated dramatically since then, with ChatGPT reaching 800 million weekly active users as of 2025, doubling from 400 million in February 2025. The platform serves over 1 billion messages daily, showcasing how deeply integrated it has become in personal and professional workflows.

Source: CB Insights

The generative AI market has experienced explosive growth since ChatGPT's launch. The global generative AI market size was valued at $16.9 billion in 2024 and is projected to reach $109.4 billion by 2030, growing at a compound annual growth rate of 37.6% during the forecast period. Alternative market research projects even more aggressive growth, with the market reaching $71.4 billion in 2025 and surging to $890.6 billion by 2032 at a CAGR of 43.4%. For comparison, Bloomberg Intelligence projected in 2023 that the market would grow from $40 billion in 2022 to $1.3 trillion by 2032.
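The growth rates quoted in these forecasts follow from the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below applies it to the $71.4 billion (2025) and $890.6 billion (2032) figures, assuming a seven-year forecast period. The `cagr` helper is illustrative and not taken from any of the cited reports.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market size in billions of USD, taken from the projection in the text.
# Assumption: the forecast period runs from 2025 to 2032, i.e. seven years.
rate = cagr(start_value=71.4, end_value=890.6, years=7)
print(f"Implied CAGR: {rate:.1%}")
```

The result lands close to the 43.4% cited above. Implied rates are sensitive to which year a report treats as the base of its forecast period, so recomputing other projections this way may not match their published figures exactly.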

Growth is likely to be driven by task automation, with customer operations, marketing, software, and R&D accounting for 75% of use cases. Across these industries, some estimate that generative AI has the potential to add $4.4 trillion in annual value. It is estimated that half of all work activities will be automated by 2045.

Enterprise spending patterns reflect this projected impact. Enterprise AI spend was expected to account for 30% of overall IT budget growth, rising by 5.7% in 2025 on overall IT budget increases of 1.8%. Outside of traditional IT operations, AI spending at the beginning of 2025 was projected to grow by 52%, with an average 3.3% of revenue being allocated to AI for retail and consumer products companies.

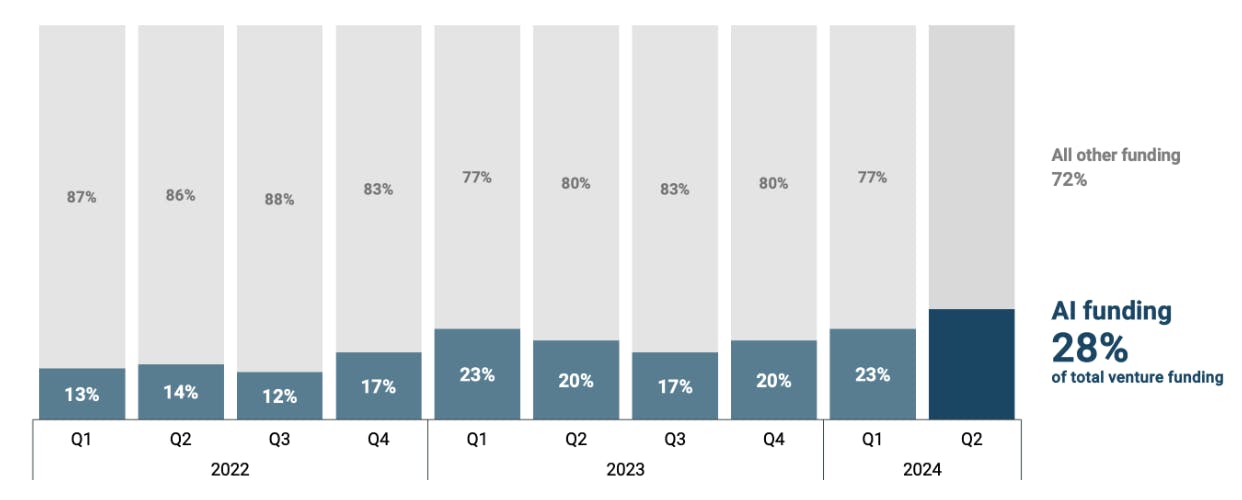

The AI funding landscape has reached historic levels, with 2024 marking a breakout year for investment in AI companies. Global venture capital funding for AI exceeded $100 billion in 2024, representing an increase of over 80% from $55.6 billion in 2023. Nearly 33% of all global venture funding was directed to AI companies, making artificial intelligence the leading sector for investments and surpassing even peak global funding levels from 2021.

Generative AI specifically attracted approximately $45 billion in venture capital funding in 2024, nearly doubling from $24 billion in 2023. Late-stage venture capital deal sizes for generative AI companies skyrocketed from $48 million in 2023 to $327 million in 2024, highlighting investor confidence in the sector's potential.

The investment momentum has continued into 2025, with AI startups receiving 53% of all global venture capital dollars invested in the first half of the year. In the United States specifically, this percentage jumps to 64%, with AI startups also comprising nearly 36% of all funded startups in the country.

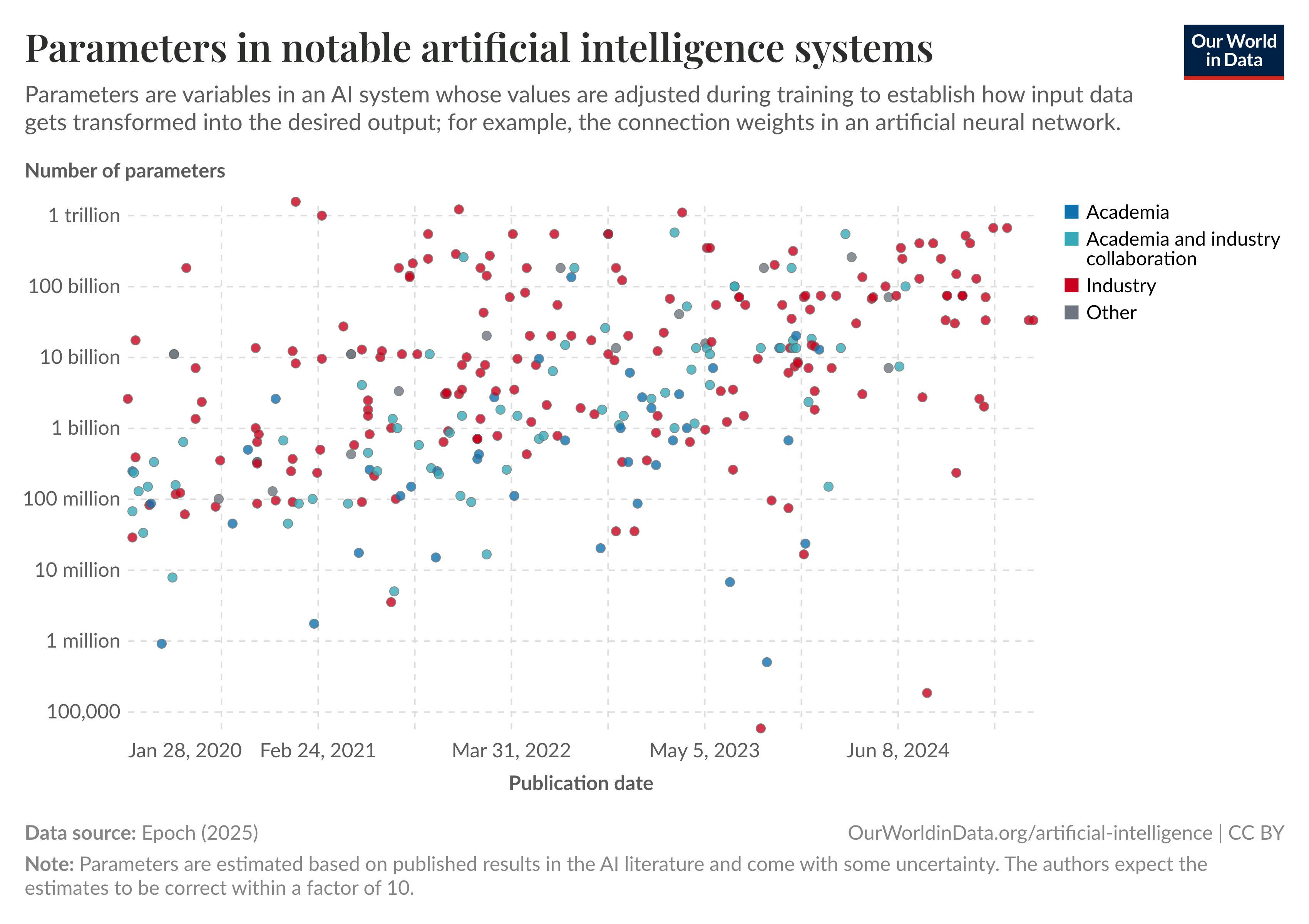

Source: Axios

A key metric underscoring the rapid growth in generative AI is the number of parameters in commercial NLP models. One of the first transformer models, Google’s BERT-Large, was created with 340 million parameters in 2018. Within just a few years, the parameter size of leading AI models has grown exponentially. Google’s PaLM 2, released in May 2023, had 340 billion parameters at its maximum size. Some rumors have even suggested that GPT-4 had nearly 1.8 trillion parameters, though OpenAI has not disclosed the actual number.

Source: Our World In Data

The number of parameters used within a model is one factor in determining its accuracy across different tasks. While most models excel at classifying, editing, and summarizing text and images, their capacity to successfully execute tasks autonomously varies greatly. As CEO Dario Amodei put it, “they feel like interns in some areas and then they have areas where they spike and are really savants.”

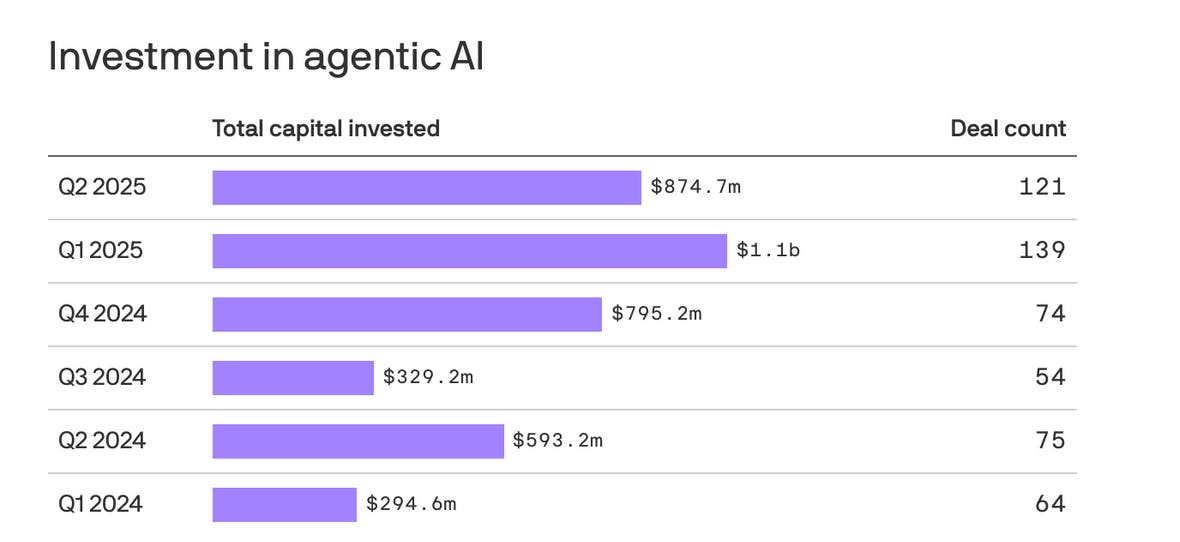

Source: HAI Stanford

The emergence of agentic AI represents a shift beyond traditional chatbot applications. Agentic AI systems decompose complex goals into subtasks, use tools, maintain state across extended interactions, and self-correct based on feedback, allowing them to move closer to autonomous execution of business processes and workflows. The agentic AI market is projected to grow from approximately $7.6 billion in 2025 to $199.1 billion by 2034, a CAGR of 43.8% during the forecast period. By 2028, it is projected that 33% of enterprise applications will feature agentic AI, up from less than 1% in 2024. As of 2025, 99% of organizations plan to deploy agentic AI eventually, though only 11% have done so already.

CEO Dario Amodei expressed his fear about the potential impact of increasingly capable models in the long term by stating that:

“No one cares if you can get the model to hotwire [a car] — you can Google for that. But if I look at where the scaling curves are going, I’m actually deeply concerned that in two or three years, we’ll get to the point where the models can… do very dangerous things with science, engineering, biology, and then a jailbreak could be life or death.”

Competition

OpenAI

OpenAI is both Anthropic’s largest competitor and the former employer of many members of its founding team. Founded in 2015, OpenAI is best known for its development of the Generative Pre-trained Transformer (GPT) models. It released its first GPT model in 2018, and its public chatbot ChatGPT, powered by GPT-3.5, amassed well over 100 million monthly active users within two months of its launch.

ChatGPT serves 700 million weekly users as of October 2025 across consumers and enterprises, with ChatGPT attracting 1.5 million enterprise customers across its Enterprise, Team, and Edu offerings. The platform serves over 10 million paying subscribers across its consumer plans, including 15.5 million Plus subscribers as of May 2025.

In March 2025, the company closed the largest private funding round in tech history as of December 2025, raising $40 billion at a $300 billion post-money valuation, led by SoftBank's $30 billion contribution. OpenAI raised an additional $8.3 billion in August 2025, led by Dragoneer Investment Group's $2.8 billion check. As of February 2026, OpenAI has raised approximately $79 billion in total funding, and it is reportedly seeking to raise up to $100 billion in funding at a valuation of up to $830 billion.

OpenAI completed a corporate restructuring in October 2025, converting its for-profit arm into a public benefit corporation that remains controlled by the OpenAI Foundation nonprofit. Microsoft holds a 27% stake and retained access to OpenAI's technology through 2032. The deal also ends Microsoft's exclusive cloud rights.

The company's annual recurring revenue jumped to $13 billion in August 2025, with projections to reach $12.7 billion in total revenue for 2025 announced in May 2025. The company is exploring secondary sales that could value it at up to $500 billion, underscoring investor confidence despite the company's substantial losses. OpenAI generated $3.7 billion in revenue in 2024, representing more than a tripling from $1 billion in 2023. OpenAI is projected to complete $11.6 billion in sales in 2025, with executives predicting revenue could reach $100 billion by 2029, comparable to annual sales figures of major companies like Nestlé and Target.

Like Anthropic, OpenAI offers an API platform for its foundation models. In December 2025, OpenAI released GPT-5.2, its latest frontier model. The release followed an internal "code red" memo as ChatGPT traffic dipped, and amidst concerns about losing consumers to Google. GPT-5.2 is available in three variants: Instant for routine queries, Thinking for complex structured work, and Pro for maximum accuracy on difficult problems. OpenAI claimed GPT-5.2 sets new benchmark scores in coding, math, science, vision, and long-context reasoning.

Its AI chatbot, ChatGPT, allows users to interact with GPT-5.2 for free. With a paid plan priced at $20/month, users can also access GPT-4.1 and GPT-4o, as well as OpenAI’s image generator, DALL-E. In December 2024, OpenAI launched a Pro plan priced at $200 per month, with extended usage limits and advanced features, similar to Claude’s Max plan.

In February 2026, OpenAI followed up GPT-5.2 with the release of GPT-5.3-Codex, which it called “the most capable agentic coding model to date”. It claimed that the model “advances both the frontier coding performance of GPT-5.2-Codex and the reasoning and professional knowledge capabilities of GPT-5.2, together in one model, which is also 25% faster.” The model competes directly with Claude Opus 4.6, which was released the same day. Opus 4.6 led on reasoning and code-fix benchmarks, while GPT-5.3-Codex led on execution-focused tasks and environment interaction.

The same week as it released GPT-5.3-Codex to compete with Claude Opus 4.6, OpenAI also released OpenAI Frontier to compete with Claude Cowork. OpenAI described Frontier as “a new platform that helps enterprises build, deploy, and manage AI agents that can do real work”, naming companies like State Farm, HP, Intuit, and Uber among the platform’s early adopters.

OpenAI has also invested in multiple safety initiatives, including a new superalignment research team announced in July 2023 and a new Safety and Security Committee introduced in May 2024. However, current and former employees have continued to criticize OpenAI for deprioritizing safety. In spring 2024, OpenAI’s safety team was given only a week to test GPT-4o. “Testers compressed the evaluations into a single week, despite complaints from employees,” in order to meet a launch date set by OpenAI executives. Days after the May 2024 launch, Jan Leike, OpenAI’s former Head of Alignment, became the latest executive to leave the company for Anthropic, claiming that “over the past years, safety culture and processes [had] taken a backseat to shiny products” at OpenAI.

In June 2024, a group of current and former employees at OpenAI alleged that the company was “recklessly racing” to build AGI and “used hardball tactics to prevent workers from voicing their concerns about the technology.” Along with former employees of DeepMind and Anthropic, they signed an open letter calling for AI companies to “support a culture of open criticism” and to allow employees to share “risk-related concerns.” In addition, one of OpenAI’s co-founders, Ilya Sutskever, left the company in May 2024 and by June 2024 had started a new company called Safe Superintelligence Inc. (SSI), stating that safety is in the company’s name because it is “our mission, our name, and our entire product roadmap because it is our sole focus.”

In January 2025, the Trump administration announced the Stargate project, a $500 billion initiative aimed at advancing AI infrastructure in the US, through the construction of over 20 large-scale data centers nationwide. The project will be funded by SoftBank and MGX, while OpenAI, Oracle, Microsoft, Nvidia, and Arm will be responsible for the operational and technological contribution.

xAI

Founded in July 2023 by Elon Musk, xAI is a frontier AI lab spun out of Musk’s broader X/Twitter ecosystem. From its inception, xAI has aimed to build a “maximum truth-seeking AI” that, in Musk’s words, “understands the universe.” The company released its first model, Grok-1, in November 2023, integrated directly into X’s premium subscription tiers. Since then, xAI has scaled both model capabilities and its underlying infrastructure.

The company's trajectory took a dramatic turn in March 2025 when Musk announced that xAI had formally acquired X Corp., consolidating the social media platform and AI startup into a single entity. The all-stock transaction valued xAI at $80 billion and X at $33 billion, while positioning xAI to leverage X's vast data streams for model training. "xAI and X's futures are intertwined," Musk explained, emphasizing how the merger would "combine the data, models, compute, distribution, and talent" to create competitive advantages that standalone AI companies cannot match.

xAI has raised substantial funding. In July 2025, the company raised $10 billion, split evenly between $5 billion in equity and $5 billion in secured notes and term loans, with SpaceX contributing $2 billion to the equity portion. In December 2025, xAI was expected to close a $15 billion funding round at a $230 billion pre-money valuation. It ultimately closed $20 billion in an upsized Series E in January 2026, which brought its total funding to $47.2 billion. Then, in February 2026, the company was acquired in a megamerger by SpaceX, another Elon Musk company, in a deal that valued xAI at $250 billion and the combined entity at $1.25 trillion.

In July 2025, xAI unveiled Grok 4 and Grok 4 Heavy, which the company claims are "the most intelligent models in the world." The models feature native tool use and real-time search integration, with Grok 4 Heavy designed for the most challenging tasks. These releases built on Grok 3, launched in February 2025, which Musk stated was trained with "10x more computing power" than its predecessor using the company's Colossus supercomputer, a cluster of around 200K GPUs. As of December 2025, xAI was operating Colossus in Memphis with more than 200K H100 GPUs, with plans to expand it to one million GPUs in 2026. xAI expected to generate $1 billion in gross revenue by the end of 2025, with projections reaching $13 billion by 2029.

In July 2025, the US Department of Defense announced contract awards of up to $200 million for AI development at xAI, along with Anthropic, Google, and OpenAI. That same month, xAI launched "Grok for Government," making its models available to US government customers.

Musk has called Grok a "maximally truth-seeking" AI in a bid to set it apart from its rivals. However, it drew criticism in 2026 for being too permissive in generating sexualized images, and for expressing controversial views during a brief period in 2025, although the company has since apologized and corrected the underlying problem.

Google DeepMind

Founded in 2010, DeepMind was acquired by Google in 2014 and later merged with Google Brain in 2023 to accelerate AI development. The combined entity, known as Google DeepMind, represents Google's primary threat to both OpenAI and Anthropic in the frontier AI model race.

In November 2025, Google launched Gemini 3, its most advanced foundation model. Gemini 3 achieved a record 1501 Elo on LMArena and scored 37.4% on Humanity's Last Exam, surpassing GPT-5 Pro's previous record of 31.64%. The release reportedly triggered an internal "code red" at OpenAI, prompting the accelerated release of GPT-5.2.

Google followed with Gemini 3 Flash in December 2025, offering robust model capabilities at significantly lower cost. Gemini 3 Flash matches the performance of Gemini 3 Pro and GPT-5.2 on many benchmarks while being three times faster. Since launching Gemini 3, Google has been processing over one trillion tokens per day on its API.

Alongside Gemini 3, Google released Antigravity, a new coding interface that allows for multi-pane agentic coding similar to agentic IDEs like Cursor. As of November 2025, the Gemini app had over 650 million monthly active users, and AI Overviews reached 2 billion monthly users. Google also noted that over 70% of its Cloud customers use its AI offerings, and 13 million developers have built with its generative models.

DeepSeek

Founded in July 2023 by Liang Wenfeng in Hangzhou, DeepSeek operates as a subsidiary of the Chinese hedge fund High-Flyer. The company's breakthrough lies not in revolutionary architecture, but in extreme efficiency. DeepSeek claims it trained its V3 model for just $6 million, far less than the $100 million cost for OpenAI's GPT-4, using approximately one-tenth the computing power consumed by Meta's comparable model, Llama 3.1.

Rather than relying on the most advanced H100 chips that U.S. export controls have restricted, DeepSeek focused on making extremely efficient use of more constrained hardware, primarily using Nvidia H800 chips designed for the Chinese market. The company's R1 model uses reinforcement learning without extensive labeled data to achieve high-quality reasoning capabilities, calling into question whether the expensive training with human feedback employed by competitors was necessary.

In December 2025, DeepSeek released DeepSeek-V3.2. The V3.2-Speciale variant earned gold-medal level performance across multiple international competitions including the International Mathematical Olympiad (IMO), Chinese Mathematical Olympiad (CMO), ICPC World Finals, and International Olympiad in Informatics (IOI) 2025. DeepSeek-V3.2 scored 96.0% on AIME 2025, surpassing both GPT-5's 94.6% and Gemini 3 Pro's 95.0%. The model is available under the MIT License at $0.028 per million input tokens, roughly one-tenth the cost of GPT-5.

DeepSeek's success has broader geopolitical implications. U.S. President Donald Trump called DeepSeek a "wake-up call" for American industry to be "laser-focused on competing to win." The company's emergence has revitalized Chinese venture capital interest in AI after three years of decline, with investors rushing to find "the next DeepSeek."

The company remains focused on research rather than immediate commercialization, allowing it to avoid certain provisions of China's AI regulations aimed at consumer-facing technologies. DeepSeek's hiring approach emphasizes skills over lengthy work experience, resulting in many hires directly from university. The company recruits individuals without computer science backgrounds to expand expertise in areas like poetry and advanced mathematics.

DeepSeek has struggled with stability issues when encouraged by Chinese authorities to adopt Huawei's Ascend chips instead of Nvidia hardware. Reports suggest that R2, the intended successor to R1, has been delayed due to slow data labeling and chip problems. Additionally, concerns have been raised about the company's training methods, as DeepSeek appears to have relied on outputs from OpenAI models, potentially violating OpenAI's terms of service.

Cohere

Cohere, which aims to bring AI to businesses, was founded in 2019 by former AI researchers Aidan Gomez, Nick Frosst, and Ivan Zhang. Gomez serves as CEO, and prior to starting Cohere, he had interned at Google Brain, where he worked under Geoffrey Hinton and co-wrote the breakthrough paper introducing the transformer architecture.

In August 2025, Cohere raised $500 million at a $6.8 billion valuation, led by Radical Ventures and Inovia Capital with participation from returning investors including Nvidia, AMD Ventures, PSP Investments, and Salesforce Ventures. The round was oversubscribed and represented a 24% increase from the company's $5.5 billion valuation a year earlier, in contrast to its previous pattern of falling short of fundraising goals. As of December 2025, Cohere's total funding stands at $1.7 billion.

Cohere provides in-house LLMs for tasks like summarization, text generation, classification, data analysis, and search to enterprise customers. These LLMs can be used to streamline internal processes, with notable customization and hyperlocal fine-tuning not often provided by competitors.

As of December 2025, the company has appointed Joelle Pineau, former Vice President of AI Research at Meta, as Chief AI Officer, and Francois Chadwick, former CFO at Uber and Shield AI, as Chief Financial Officer. Pineau, who recently departed Meta after leading its Fundamental AI Research (FAIR) lab, will direct research and product development from Cohere's new Montreal office.

Unlike OpenAI and Anthropic, Cohere's mission is centered on the accessibility and security of LLMs for enterprise use rather than the strength of its foundation models. In an interview with Scale AI founder Alexandr Wang, Cohere CEO Aidan Gomez emphasized this exact need as the key problem Cohere seeks to address:

“[We need to get] to a place where any developer like a high school student can pick up an API and start deploying large language models for the app they’re building […] If it’s not in all 30 million developers toolkit, we’re going to be bottlenecked by the number of AI experts, and there always will be a shortage of that talent.”

Cohere's platform is cloud-agnostic and can be deployed across public clouds, virtual private clouds, or on-premises, with partnerships spanning major enterprise technology providers including Oracle, Dell, Bell, Fujitsu, LG's consulting service CNS, and SAP. The company has also secured enterprise customers such as RBC, Notion, and the Healthcare of Ontario Pension Plan, which became a new investor in the latest round.

Hugging Face

Founded in 2016 and aspiring to become the “GitHub for machine learning”, Hugging Face is the main open-source community platform for AI projects. As of October 2025, it had over 2 million free and publicly available models spanning NLP, computer vision, image generation, and audio processing. Any user on the platform can post and download models and datasets; its most downloaded models include GPT-2, BERT, and Whisper. Although most transformer models are too large to train without supercomputers, users can access and deploy pre-trained models from the platform.

Hugging Face operates on an open-core business model, meaning all users have access to its public models. Paying users get additional features, such as higher rate limits, its Inference API integration, additional security, and more. Compared to Anthropic, its core product is more community-centric. Clem Delangue, CEO of Hugging Face, noted in an interview:

“I think open source also gives you superpowers and things that you couldn't do without it. I know that for us, like I said, we are the kind of random French founders, and if it wasn't for the community, for the contributors, for the people helping us on the open source, people sharing their models, we wouldn't be where we are today.”

Hugging Face’s relationship with its commercial partners is less direct than that of its competitors, including Anthropic. Nonetheless, it has raised $395.2 million as of February 2026, with its last round having been a $235 million Series D in August 2023. This put the company at a valuation of $4.5 billion, which was double its valuation during its previous round in May 2022 and more than 100x its reported ARR. As a platform spreading large foundation models to the public (albeit models 1-2 generations behind state-of-the-art models such as those being developed by Anthropic and OpenAI), Hugging Face represents a significant player in the AI landscape. In 2022, Hugging Face, with the help of over 1K researchers, released BLOOM, which the company claims is “the world’s largest open multilingual language model.”

Other Research Organizations

Anthropic also competes with various established AI research labs backed by large tech companies, most notably Meta AI and Microsoft Azure AI.

Meta: In April 2025, Meta introduced three new models in its Llama 4 family: Scout, Maverick, and Behemoth. Scout and Maverick are publicly available via Llama.com and platforms like Hugging Face, while Behemoth remains in training. As of May 2025, Meta AI, the assistant integrated into WhatsApp, Messenger, and Instagram, runs on Llama 4 in 186 countries.

Prior to this, Meta released LLaMA 3 in April 2024. In July 2023, Meta announced that it would be making LLaMA open-source. “Open source drives innovation because it enables many more developers to build with new technology”, posted Mark Zuckerberg. “It also improves safety and security because when software is open, more people can scrutinize it to identify and fix potential issues.” While LLaMA 3 lags behind GPT-4 in reasoning and mathematics, it remains one of the largest open-source models available and rivals major models in some performance aspects, making it a likely choice for independent developers.

Meta's AI strategy shifted in late 2025. The company is reportedly developing a new frontier model codenamed Avocado that may abandon the open-source tradition, instead adopting a closed-source commercial approach to compete directly with OpenAI, Google, and Anthropic. This contradicts CEO Mark Zuckerberg's previous public stance championing open source. The Avocado release is targeted for Q1 2026. Alexandr Wang, former CEO of Scale AI, was named Meta's Chief AI Officer and in August became head of an elite unit called TBD Lab, where Avocado is being developed. Meta has raised its 2025 capital expenditure guidance to $70-72 billion to fund AI infrastructure.

Microsoft Azure: Among its hundreds of services, Microsoft Azure’s AI platform offers tools and frameworks for users to build AI solutions. While Azure offers a portfolio of AI products instead of its own foundation models, these products include top models like GPT-4o and LLaMA 2, which can be used for services ranging from internal cognitive search to video and image analysis. Unlike Claude, which is low-code and accessible for teams of all sizes, Azure primarily targets developers and data scientists capable of coding on top of existing models. As such, Microsoft Azure AI serves as an indirect competitor to Anthropic, increasing the accessibility and customizability of foundation AI models.

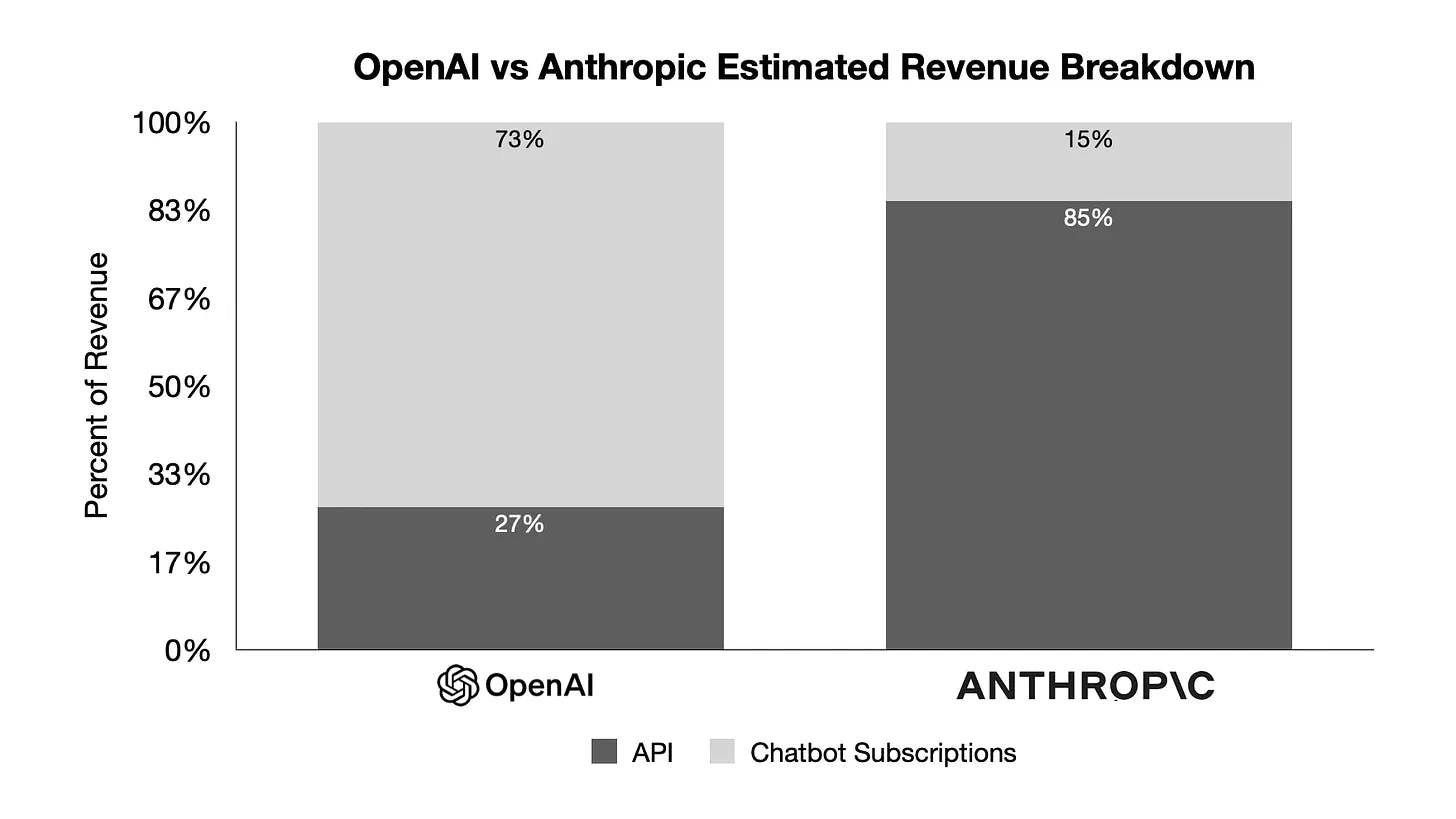

Business Model

Anthropic generates revenue through both usage-based APIs and subscription-based access to its Claude models. According to internal estimates, API sales accounted for the vast majority of the company's revenue in 2024: 60–75% from third-party API integrations, 10–25% from direct API customers, 5% from chatbot subscriptions, and 2% from professional services.

Source: Tanay’s Newsletter

Anthropic offers three main subscription tiers for individual users:

Free: Access to Claude via web and mobile, with support for image and document queries using Claude Sonnet 4.6.

Pro ($20/month, or $17/month when billed annually): Adds 5x higher usage limits, Projects functionality, and access to Claude Opus 4.6 and other advanced models.

Max ($100–$200/month): Introduced in April 2025, designed for power users with two tiers. The $100/month tier provides 5x higher rate limits than Pro, while the $200/month tier offers 20x higher usage limits, priority access during peak traffic, and early access to new features.

Source: Anthropic

For teams, Anthropic introduced a Team Plan in May 2024, priced at $20/user/month. It includes admin controls, usage consolidation, and shared Claude access, making it suitable for organizations deploying AI broadly across departments. Claude Code access can be added by purchasing premium seats at $100/user/month. Custom Enterprise Plans are available for higher-volume clients, with reported pricing of $60 per seat for a minimum of 70 users and a 12-month contract, resulting in a minimum Enterprise plan cost of approximately $50K annually.
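The approximately $50K floor follows directly from the reported Enterprise terms. The sketch below reproduces the arithmetic, assuming the $60 per-seat price is billed monthly; the source does not state the billing period, but monthly billing is what yields the quoted total. The function name is illustrative only.

```python
def minimum_contract_cost(price_per_seat_month, min_seats, months):
    """Smallest possible contract value given a seat minimum and a contract term."""
    return price_per_seat_month * min_seats * months

# Reported Enterprise terms: $60 per seat (assumed monthly), 70-seat minimum, 12 months.
enterprise_floor = minimum_contract_cost(60, 70, 12)
print(f"${enterprise_floor:,.0f}")  # $50,400
```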

Anthropic's API pricing varies significantly by model tier, balancing accessibility against the computational costs of increasingly powerful models. Claude Opus 4.6 is priced at $5/$25 per million tokens (input/output), a 67% reduction from Claude Opus 4’s $15/$75 pricing. Claude Haiku 4.5 is priced at $1/$5 per million tokens, positioned as a cost-efficient option for high-volume tasks compared to Claude Sonnet 4.5, which maintained the $3/$15 per million tokens pricing that Claude Sonnet 4.0 had. Extended context pricing is $6/$22.50 per million tokens on requests exceeding 200K tokens.

The company also offers several cost optimization features:

Batch Processing: 50% discount on both input and output tokens for asynchronous processing

Prompt Caching: Up to 90% cost savings with intelligent caching of repeated prompts
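To make the per-token prices and the batch discount concrete, here is a minimal sketch of a cost estimator. The prices are the per-million-token figures quoted in this section. The dictionary keys are shorthand labels rather than real API model identifiers, and the function name and example token counts are hypothetical. The sketch deliberately leaves out prompt caching and extended-context pricing, since those savings depend on the workload.

```python
# Per-million-token prices in USD as (input, output), as quoted in this section.
# Keys are shorthand labels for illustration, not official model identifiers.
PRICES = {
    "opus-4.6": (5.00, 25.00),
    "sonnet-4.5": (3.00, 15.00),
    "haiku-4.5": (1.00, 5.00),
}
BATCH_DISCOUNT = 0.50  # 50% off both input and output tokens for batch jobs


def estimate_cost(model, input_tokens, output_tokens, batch=False):
    """Estimate the USD cost of a workload at list prices."""
    input_price, output_price = PRICES[model]
    cost = (input_tokens * input_price + output_tokens * output_price) / 1_000_000
    if batch:
        cost *= 1 - BATCH_DISCOUNT
    return cost


# Hypothetical workload: 2M input tokens and 0.5M output tokens.
for model in PRICES:
    standard = estimate_cost(model, 2_000_000, 500_000)
    batched = estimate_cost(model, 2_000_000, 500_000, batch=True)
    print(f"{model}: ${standard:.2f} standard, ${batched:.2f} batch")
```

On this hypothetical workload, the same job costs five times as much on the Opus tier as on the Haiku tier, and the batch discount halves each figure.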

In July 2025, Anthropic introduced new weekly rate limits to manage computational demand, particularly for Claude Code usage. Based on usage patterns as of December 2025, the limits affect fewer than 5% of subscribers:

Pro Plan: 40 to 80 hours of Sonnet 4 through Claude Code weekly

Max $100 Plan: 140 to 280 hours of Sonnet 4 and 15 to 35 hours of Opus 4 weekly

Max $200 Plan: 240 to 480 hours of Sonnet 4 and 24 to 40 hours of Opus 4 weekly

Max subscribers can purchase additional usage beyond rate limits at standard API rates. These limits respond to "unprecedented demand" for Claude Code since its launch, according to Anthropic. In November 2025, Anthropic removed the separate Opus-specific usage caps for Claude and Claude Code that previously restricted access even for paid subscribers. Claude Opus 4.5 tokens are now counted toward overall usage quotas, instead of using strict monthly limits, allowing Claude Opus 4.5 to be increasingly used for daily work without hitting cutoffs.

In December 2025, Anthropic launched Claude for Nonprofits, offering nonprofit organizations discounts of up to 75% on Team and Enterprise plans. Connectors to Benevity, Blackbaud, and Candid, three nonprofit software providers, were also announced as the company looks to expand into a new customer segment in a manner aligned with its public benefit corporation mission.

Since its open-sourcing of MCP in November 2024, Anthropic has continued to invest in the developer ecosystem. In September 2025, Anthropic released the Claude Agent SDK to allow third-party developers to build agent solutions on top of Claude. In October 2025, Petri, an automated evaluation and auditing tool for AI systems, was open-sourced by the company to allow researchers to more easily explore model behavior. While not directly generating revenue for the company, these initiatives drive broader platform adoption with developers and researchers and reflect efforts to continue strengthening the broader Claude ecosystem.

Anthropic announced plans to invest $50 billion in computing infrastructure in November 2025. The custom data centers will be built in partnership with Fluidstack, with Texas and New York being the two announced sites as of December 2025. This long-term investment marks a departure from relying solely on strategic cloud partners, like Amazon.

In December 2025, Anthropic completed its first-ever acquisition, purchasing Bun. Bun is a high-performance, all-in-one developer toolkit that improves the experience of building and testing applications for JavaScript and TypeScript. At the time of the acquisition, Anthropic committed to keeping Bun open-source and MIT-licensed. The acquisition positions Anthropic to define the runtime standard for agentic systems, focusing on embedding Anthropic in infrastructure where AI agents will increasingly operate.

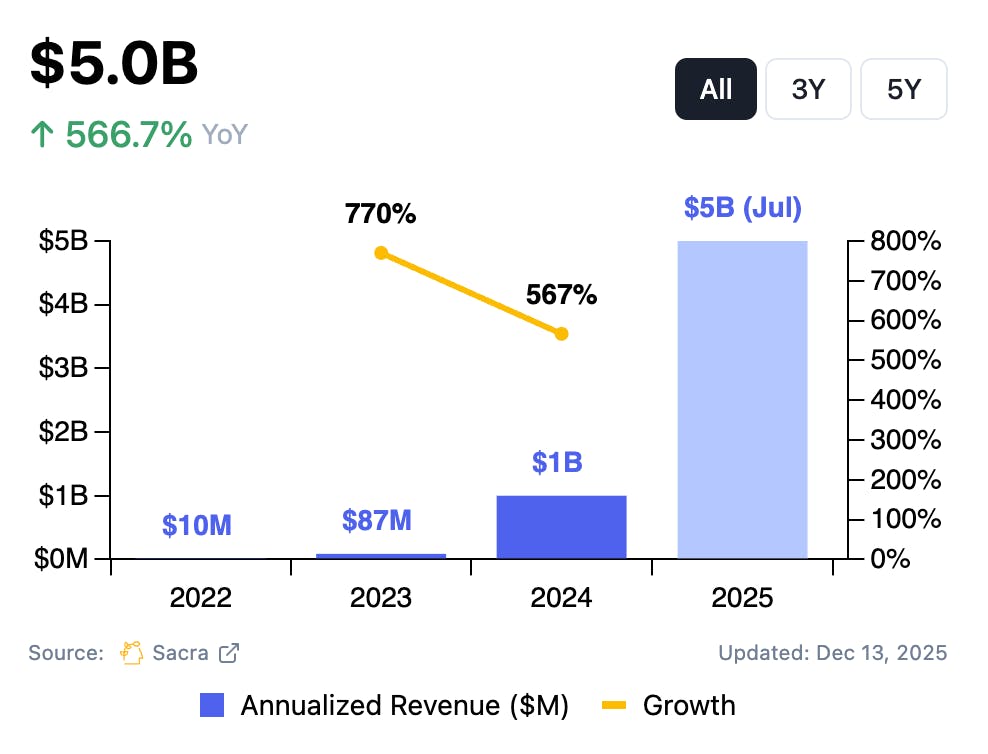

Traction

Anthropic has seen commercial momentum across strategic partnerships, enterprise adoption, and revenue growth. In October 2025, the company is estimated to have reached $5 billion in annual recurring revenue, up from $3 billion in May 2025, $1.4 billion in March 2025, and $1 billion at the end of 2024. In December 2025, the company projected hitting $9 billion in ARR by the end of the year. Revenues from APIs hit $3.8 billion and Claude Code approached $1 billion in annualized revenue, up from $400 million in July.

As of a November 2025 report, Anthropic expects to reach $20-26 billion ARR in 2026, with targets of $70 billion ARR by 2028. With these projections, the company expects to reach profitability by 2027-2028, with 77% gross profit margins and $17 billion in positive cash flow. The gross profit margin in 2024 was -94%.

Source: Sacra

Strategic Partnerships

As of 2025, Anthropic's largest infrastructure partner remains Amazon. In November 2024, Amazon deepened its relationship with Anthropic by committing an additional $4 billion in funding, bringing its total investment to $8 billion. As part of the expanded agreement, AWS became Anthropic's primary cloud and training partner, with Anthropic committing to train future foundation models on AWS Trainium and Inferentia chips.

Anthropic has also continued to increase ties with Google. In October 2025, the company announced an expansion of its Google Cloud partnership, securing access to up to one million TPUs and over one gigawatt of compute capacity by 2026, representing a deal worth tens of billions of dollars. Google owns a 14% stake in Anthropic and has invested $3 billion in the company, with rumors of a further $3 billion investment reported in November 2025.

A strategic partnership with Microsoft and Nvidia has also expanded Anthropic’s infrastructure capacity and multi-cloud positioning. Under the agreement, announced in November 2025, Microsoft and Nvidia committed to invest up to $5 billion and $10 billion, respectively, in Anthropic, while Anthropic committed to purchasing $30 billion of Azure compute capacity and up to one gigawatt of compute capacity using Nvidia’s Grace Blackwell and Vera Rubin systems.

In March 2025, Anthropic announced a significant partnership with Databricks, establishing a five-year strategic relationship to integrate Anthropic's models natively into the Databricks Data Intelligence Platform. This partnership provided over 10K companies access to Claude models for building AI agents. In September 2025, Microsoft announced a partnership with Anthropic to integrate Claude into Microsoft’s Copilot, which had been powered solely by OpenAI models until that point.

Anthropic has expanded into government and defense sectors through strategic partnerships. In November 2024, Palantir announced a partnership with Anthropic and Amazon Web Services to provide U.S. intelligence and defense agencies access to Claude models. This marked the first time Claude would be used in "classified environments." In June 2025, Anthropic announced a "Claude Gov" model, which as of June 2025 was in use at multiple US national security agencies. In July 2025, the United States Department of Defense announced that Anthropic had received a $200 million contract for AI in the military, alongside Google, OpenAI, and xAI. In August 2025, Anthropic launched Claude for Government through the US General Services Administration (GSA) OneGov Deal, offering Claude for Enterprise and Claude for Government to all branches of the US government for $1/year.

Enterprise Adoption

Claude is available via Amazon Bedrock, where it serves as an element of the core infrastructure for tens of thousands of customers. Notable enterprise adopters include Pfizer (saving tens of millions in operational costs), Intuit, Perplexity, and the European Parliament (powering a chatbot that analyzes 2.1 million official documents). Other major enterprise customers include Slack, Zoom, GitLab, Notion, Factory, Asana, BCG, Bridgewater, and Scale AI. In July 2025, AWS launched an AI agent marketplace with Anthropic as a key partner, allowing startups to directly offer their AI agents to AWS customers through a single platform.

Anthropic continues to expand its developer platform traction through integrations and partnerships. According to CEO Dario Amodei in November 2024, "Several customers have already deployed Claude's computer use ability," noting that "Replit moved fast." Replit is one of several next-generation IDE companies, alongside Cursor, Vercel, and Bolt.new, that have adopted Claude to support development workflows.